Lupine Publishers Group

ISSN: 2641-1709

Review Article

Hearing Assessment and Training in Immersive Virtual Reality; Clinical Model and Validation with Cochlear Implant Users

Volume 8 - Issue 4

François Bergeron1*, Smita Agrawal2, Arthur Lemolton3, Thomas Fariney3, Dominique Demers1, Aurore Berland4 and Bastien Bouchard5

- 1Director, Audiology Graduate Program, Faculty of Medicine, Université Laval, Québec, Qc, Canada

- 2Principal Research scientist, Advanced Bionics Corporation, Los Angeles, California, USA

- 3Master Applicant in Neuroprotheses sensorielles et motrices program, Université de Montpellier, Montpellier, France

- 4Université Jean Jaurès, Toulouse, France

- 5Technologies Immersion, Québec, Canada

Received: June 21, 2022; Published: July 01, 2022

Corresponding author: François Bergeron, Director, Audiology Graduate Program, Faculty of Medicine, Université Laval, Québec, Qc, Canada

DOI: 10.32474/SJO.2022.08.000292

Introduction

Reduction of auditory disability is usually the primary objective of technological and therapeutic interventions offered to hearing-impaired children and adults. Numerous tests are proposed to guide these interventions and assess their benefits. Optimally, hearing care-oriented tests should explore complex abilities such as those called upon in the daily life of hearing-impaired people, especially since contemporary hearing aids and cochlear implants incorporate sophisticated signal processing to support auditory perception in diverse, complex everyday environments. Nevertheless, contemporary routine clinical assessments targeting real-life performance mostly rely on word or sentence recognition tasks administered in a controlled environment (such as a sound booth), in quiet or in a standardized speech spectrum noise issued from fixed positions, with one or two sound sources, often with arbitrarily predetermined signal-to-noise ratios. For example, the Hearing In Noise Test [1] is based on the repetition of sentences against a 65 dBA speech spectrum noise coming from the front, right or left side. The test has been standardised as an adaptive procedure to determine a speech reception threshold (SRT); however, in clinics and in research, it is frequently administered with predetermined signal-to-noise ratios (+10, +5, 0 dB). While sentences can approach realistic daily stimuli, one can argue that a speech spectrum noise issued from fixed positions with variable or arbitrarily predetermined signal-to-noise ratios can be far from real life. Similar environments are often used for rehabilitative care, either for hearing aid fitting or for auditory training therapy. Indeed, standard audiological care still has profound roots in a diagnostic-oriented context.

Now, according to the Disability Creation Process from the Human Development Model [2], assessing disability relies on the analysis of the interaction between the person and his environment in the context of his own life habits. Therefore, when the objective is disability reduction, environments and life habits should be part of hearing assessment and care. Such an ecological approach to care is reflected in recent administrative rulings: the 2018 decree on hearing aids by France’s Haute Autorité de Santé stipulates that tests reproducing real-life situations should be used to validate the delivery and efficacy of hearing care services; Quebec’s professional code stipulates that the assessment of hearing functions should target the improvement or restoration of communication of the human being in interaction with his or her environment.

Virtual reality (VR) and immersive environments are lines of research that can be applied to numerous scientific and educational domains [3]. VR provides the opportunity to enhance and modify the learning experience of healthcare professionals through immersion in a non-real environment that closely mimics the real world [4]. A unique feature of VR and immersive environments is that users can experience different situations in the VR system without physically leaving the clinic. Thus, VR offers a unique opportunity to address hearing care from an ecological perspective; indeed, some researchers have explored this opportunity. Revitronix and Etymotic Research Lab jointly developed the R-SPACE research sound system [5] from the principles of immersive reality. The system is based on recording and playback equipment. The recording set includes eight directional microphones set in a circular array, two feet from the center and pointing outward at 45° intervals around a 360° circle. In this way, environmental sounds are picked up from many horizontal directions on their way to the listening position. These direction-dependent environmental sounds are recorded on eight separate tracks of a digital recording system so that they can later be played back in the laboratory. The playback system consists of eight loudspeakers positioned like the microphone array but facing inward. The direction-dependent sounds that were recorded earlier in a real environment (e.g., in a restaurant) are thus allowed to complete their natural paths to the listener, but in a different time and place (i.e., in the laboratory). Previous validation studies had shown that an eight-source system (recording microphones / playback speakers) would allow directional hearing aids and the hearing mechanism to perform in the lab as they do in the real world [6]. Pilot validation studies confirmed the realism of the reproduction and the accurate prediction of real-world speech intelligibility over a wide range of directional devices [7] in lunchroom and restaurant simulations.

Weller et al. [8] exploited the virtual sound environment (VSE) of the Australian Hearing Hub to assess listeners’ performance in analyzing a continuous spatial auditory scene. The intent was to reproduce a scenario that is relevant in daily life, where accurate localization may be important for listeners following a conversation between multiple talkers, so that they can spatially focus their attention on the active talker and ignore other talkers. In this context the “spatial analysis” task may relate to the ability of a listener to participate in dynamic multi-talker conversations. The VSE consisted of an array of 41 loudspeakers arranged in horizontal rings at different elevation angles covering the surface of a sphere with a radius of 1.85 m. A total of 96 different acoustic scenes were generated, each containing a mixture of between one and six concurrent talkers in a reverberant environment. Results showed that “…listeners with normal hearing were able to reliably analyze scenes with up to four simultaneous talkers, while most listeners with hearing loss demonstrated errors even with two talkers at a time. Localization performance decreased in both groups with increasing number of talkers and was significantly poorer in listeners with HI. Overall performance was significantly correlated with hearing loss.” The authors concluded that the virtual sound environment is useful for estimating real-world spatial abilities.

Lau et al. [9] were interested in the effect of dual-tasking on word recognition in participants with age-related hearing impairment. To do so, they took advantage of VR to produce a controlled and safe simulated environment for listening while walking. Seven loudspeakers and a subwoofer were located behind a visual projection screen. The center loudspeaker was positioned at head height at 0° azimuth and the other six loudspeakers were distributed in an array in the same horizontal plane at 45° and 315° azimuth (right front and left front), 90° and 270° azimuth (right side and left side), and 135° and 225° azimuth (right rear and left rear). The subwoofer was located at ground level below the center loudspeaker. Each loudspeaker was positioned at a distance of 2.14 m from the participant. The auditory environment consisted of ambient street and traffic sounds that matched the visual simulation of the street scene. Talker speech could come from 0°, 90° or 270° azimuth. As expected, word recognition accuracy was significantly lower in a hearing-impaired group of older adults than in an age-matched normal-hearing group. Both groups performed better when the target location was always at 0°. The normal-hearing group demonstrated a surprising dual-task (listening and walking) benefit in conditions with lower probability of target location (0°, 90° or 270°).

The authors concluded that “… testing in a more realistic, complex, ecologically valid VR environment using a multitasking paradigm complements and extends the traditional laboratory-based research and impoverished clinical tests conducted in sound-attenuating booths. For instance, past research evaluating HA effectiveness has focused on increasing acoustic fidelity and measuring outcomes in terms of speech understanding in noise, or self-reported benefit/satisfaction. By introducing new outcome measures related to listening in more complex and dynamic conditions, as well as considering the consequences on mobility, this research can provide a more holistic perspective on how HL and HAs may affect the quality of life in older adults.”

Grimm, Kollmeier & Hohmann [10] used complex algorithms to generate virtual acoustic environments (street, supermarket, cafeteria, train station, kitchen, nature, panel discussion) in arrays of about 50 speakers in order to quantify the difference in hearing aid performance between a laboratory condition and more realistic real-life conditions. They observed that “… hearing aid benefit, as predicted by signal-to-noise ratio (SNR) and speech intelligibility measures, differs between the reference condition and more realistic conditions for the tested beamformer algorithms. Other performance measures, such as speech quality or binaural degree of diffusiveness, do not show pronounced differences. However, a decreased speech quality was found in specific conditions. A correlation analysis showed a significant correlation between room acoustic parameters of the sound field and HA performance. The SNR improvement in the reference condition was found to be a poor predictor of HA performance in terms of speech intelligibility improvement in the more realistic conditions.”

Further studies in the literature feature the use of VR to generate immersive acoustic environments [11-13], with the recurrent conclusion that the high realism of acoustic scenes generated by these systems can be exploited to assess real-life hearing perception and device performance or to perform auditory training activities. Some applications relied on a limited number of speakers, while others used large arrays of speakers; some applications set a limited number of auditory scenes, others introduced a diversity of daily-life environments. But all were designed as research-based applications; none were developed from a clinical point of view. Yet, disability assessment remains in the hands of the clinician, and research has shown that access to immersive VR tools would be quite useful to achieve this task. This project explores this avenue. There were three phases in the project, each aiming at a specific objective, that is:

a) Design a system that can virtually reproduce everyday sound experiences in the clinic and thus offer a more realistic testing condition for the clinician to assess auditory perception in hearing impaired children and adults, namely localization abilities and speech perception.

b) Specify the psychometrics of the system and define norms with normal hearing individuals.

c) Validate the concept through experimental studies in…...

Phase 1: Development of the Immersion 360 System

The Immersion 360 system emerged from a pilot project on a prototype system in which the feasibility and the relevance of creating virtual environments to assess auditory perception were confirmed [14]. At that time, experiments showed that altered capacities for speech perception could be observed in a virtual environment despite excellent performance in a standard clinical setting at a similar signal-to-noise ratio. Changing the environment affected the performance, even when signal-to-noise ratios were kept constant. Therefore, the typical clinical test condition did not seem to correctly replicate real-life functioning, which is characterized by diverse soundscapes where conversation-to-noise ratios are in the +3 to +5 dB range in order to maintain intelligibility [15]. From this foundation, the Immersion 360 system was developed to virtually reproduce common daily sound experiences with realistic noise types, incoming directions (including moving sources), and signal-to-noise ratios in order to assess the auditory perception of hearing-impaired persons in realistic test conditions. As this system relies on multiple discrete source positions, localisation capacities can also be assessed with the same equipment.

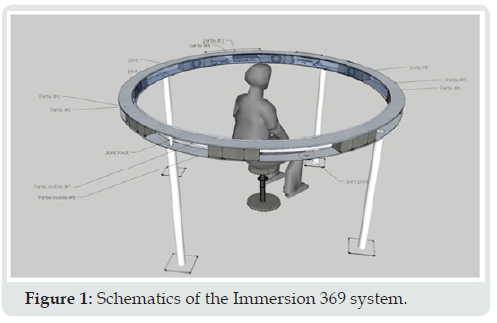

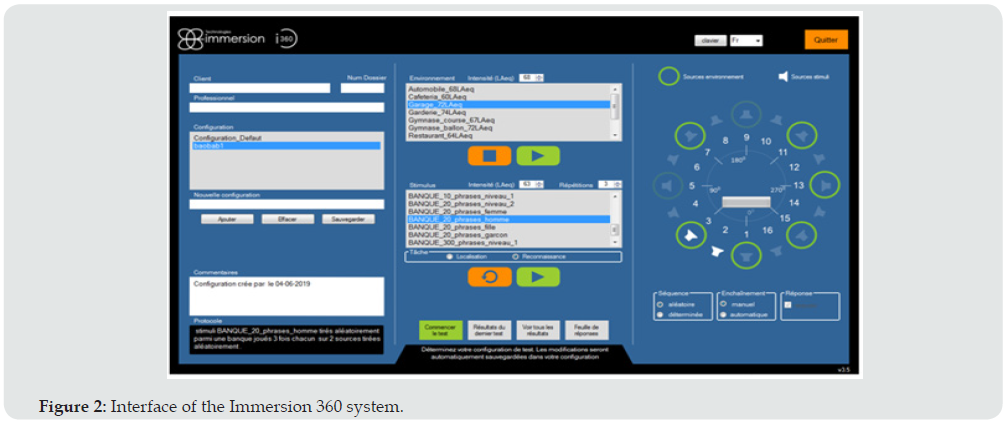

The current version of the Immersion 360 system consists of 8 point-source full-spectrum speakers and 8 virtual speakers, forming 16 sound sources altogether. These sources are mounted in an aluminium ramp, equidistant at one meter from the center of a 360° horizontal arc (Figure 1). An 8-speaker configuration was selected to facilitate implementation in clinics (compared to arrays of 20 to 50 speakers); findings from Revit, Schulein & Julstrom [6] validated that this configuration is sufficient to allow the hearing mechanism to perform in the lab as it does in the real world. The implementation of virtual sources increases the resolution of the system despite the limited number of actual sources, allowing more precise measures of angle errors for auditory localisation. A meticulous calibration of each source, physical and virtual, ensures a homogeneous loudness across the speaker array. The 2-meter inside diameter of the system is large enough to accommodate two persons for hearing training purposes, yet small enough to keep listeners in the near field of the speakers and mitigate the room’s acoustic influence.
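As a rough illustration of the geometry described above, the following Python sketch lays out 16 equally spaced source positions and shows one common way a virtual source could be rendered between two physical speakers. The alternation of physical and virtual positions, the function names, and the constant-power panning law are all assumptions made for illustration; the article does not specify how the virtual sources are generated.

```python
import math

def source_layout(n_physical=8):
    """List (azimuth_deg, kind) for physical speakers and assumed midway virtual sources."""
    step = 360.0 / n_physical  # 45 degrees between physical speakers
    layout = []
    for i in range(n_physical):
        layout.append((i * step, "physical"))
        layout.append((i * step + step / 2, "virtual"))  # assumed midpoint position
    return layout

def constant_power_gains(fraction):
    """Gains for two adjacent speakers; fraction=0 is the first speaker, 1 the second.

    Constant-power panning is an assumed technique here, not the documented
    method of the Immersion 360 system.
    """
    angle = fraction * math.pi / 2
    return math.cos(angle), math.sin(angle)

layout = source_layout()
left_gain, right_gain = constant_power_gains(0.5)  # virtual source midway
```

With eight physical speakers this yields 16 positions spaced 22.5° apart, and the two gains for a midway source are equal while their squared sum stays at 1, which keeps the radiated power constant under this assumed law.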

Following suggestions from experienced clinical audiologists, nine common challenging virtual environments were incorporated in the Immersion 360 system to assess speech perception in daily settings. These environments are: in a moving car, in a gymnasium during a ball game or during race training, at the cafeteria, at the restaurant, in street traffic, in highway traffic, at the car mechanic’s shop, and at the kindergarten. Some of these environments (in a car, on the street, meeting in a crowded place such as a restaurant) were also identified in the Wolters et al. [16] study on common sound scenarios encountered daily and in the Dillon et al. [17] study on measuring outcomes of a rehabilitation program. The environments were recorded live on location using 8 microphones set in the same configuration as the 8 point-source speakers of the system. The noise level of each environment and the live conversational speech level in each environment were recorded in order to determine the presentation levels and the signal-to-noise ratios when reproduced in the Immersion 360 system. Test parameters (selection of environments and stimuli, levels and signal-to-noise ratios, active sources, number and randomisation of presentations) are all grouped on a one-page interface to ease access for the clinician (Figure 2). Motorised legs can raise or lower the entire system depending on the listener’s height while sitting (e.g., higher position for adult assessment, lower position for auditory training with a child). A clinically oriented user interface, virtual speakers, multiple integrated environments, adjustable height and the possibility to add any new soundscape and stimulus are key features that distinguish the Immersion 360 system from the research-based systems used in preceding studies and make the system easier to integrate into clinical practice.

Phase 2: Establishment of Normative Data

With this material in hand, thirty normal-hearing adults and twenty-eight hearing-impaired adults were recruited in Canada and in France to assess the psychometrics of the system and specify normative data. Normal-hearing participants’ thresholds had to be better than 20 dB HL from 250 Hz to 8000 Hz. Thresholds had to be worse than 20 dB HL for hearing-impaired participants; the mean pure-tone average for this group was 55 dB HL. External and middle ears had to be free of any pathology as evidenced by otoscopic observation and tympanometry testing. All participants were native French speakers. Localisation capacities were assessed with the normal-hearing group. Three-second horn signals, a truck horn low-passed below 2 kHz and a car horn high-passed above 2 kHz, were used as stimuli. These signals were designed to separately address the use of interaural time difference (ITD) or interaural level difference (ILD) in the identification of the signal source direction. As normal-hearing subjects were assessed using such dichotomous signals, jittering was not used to control the use of level cues. Each stimulus was presented three times randomly from each source, for a total of 96 trials.
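The trial count above follows from 2 stimuli × 16 sources × 3 repetitions = 96. A minimal Python sketch of such a randomised trial list is given below; the stimulus labels, the function name, and the choice to shuffle both stimuli together in one list are assumptions, since the article does not describe the randomisation scheme in detail.

```python
import random
from collections import Counter

def build_localisation_trials(n_sources=16, repeats=3, seed=None,
                              stimuli=("truck_horn_lowpass", "car_horn_highpass")):
    """Return a shuffled list of (stimulus, source_index) trials."""
    trials = [(stimulus, source)
              for stimulus in stimuli
              for source in range(n_sources)
              for _ in range(repeats)]
    # Assumed scheme: one global shuffle across stimuli and sources.
    random.Random(seed).shuffle(trials)
    return trials

trials = build_localisation_trials(seed=1)
counts = Counter(trials)  # each (stimulus, source) pair should occur 3 times
```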

Sentence recognition was assessed with both groups. FrBio sentences [18], the international French adaptation of the AzBio test, were presented in quiet and in the nine virtual environments. Speech and noise levels were set at the actual levels measured with a sound level meter at the recording sites. Two FrBio lists of 20 sentences were administered in each environment; the score was the percentage of correctly identified words. The presentation order of environments and FrBio lists was randomised. In order to calculate the test-retest reliability, all tests were repeated one month later with half of the cohort. All participants spontaneously emphasized the realism of the simulated environments. With eyes closed, many had the feeling of being there. Averages, standard deviations, and 95% confidence intervals were computed for each test carried out in the Immersion 360 system. These metrics served to define the normative values for the tests. A reliability analysis was used to verify the test-retest reliability for each test of the system and to determine the standard error.
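The descriptive statistics mentioned above can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, the sample standard deviation is used, and the 95% interval is a normal-approximation interval around the mean (z = 1.96); the article does not state which interval formula was applied.

```python
import math
import statistics

def percent_words_correct(n_correct_words, n_total_words):
    """Sentence score as the percentage of correctly identified words."""
    return 100.0 * n_correct_words / n_total_words

def normative_summary(scores, z=1.96):
    """Mean, sample SD and an assumed normal-approximation 95% CI of the mean."""
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    sem = sd / math.sqrt(len(scores))  # standard error of the mean
    return {"mean": mean, "sd": sd, "ci95": (mean - z * sem, mean + z * sem)}

# Hypothetical scores for five listeners in one environment.
summary = normative_summary([97, 98, 99, 100, 98])
```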

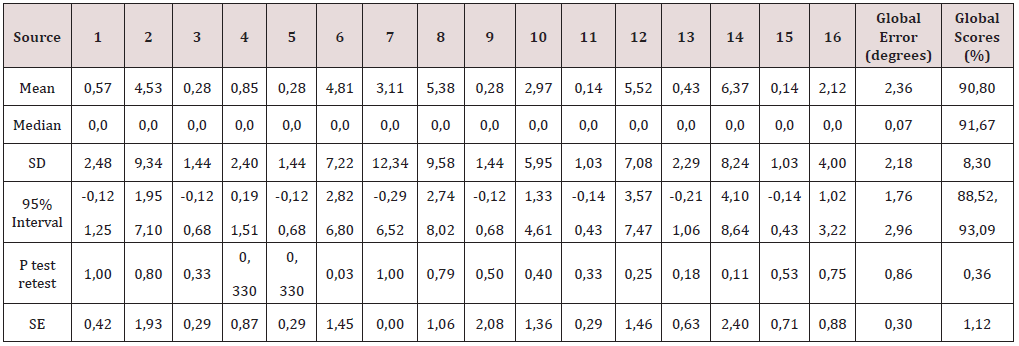

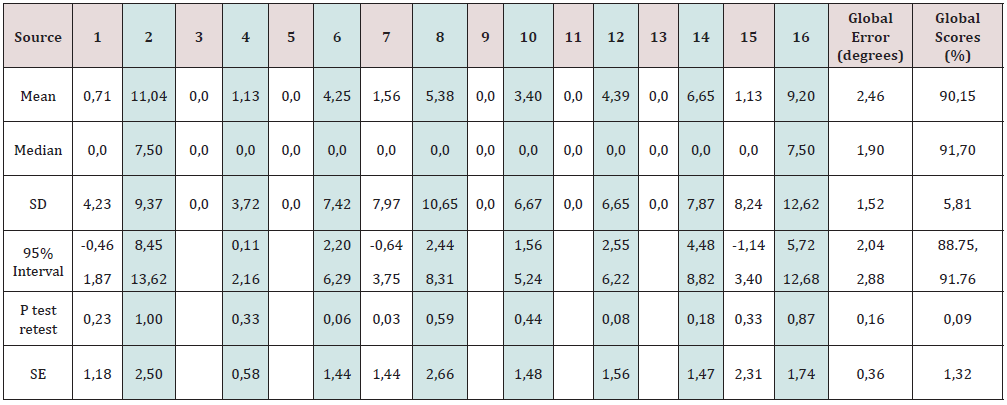

Table 1A: Psychometrics of the localisation error (in degrees) for each speaker, of the global error and of the overall score for the car horn signal (virtual sources are shaded).

Table 1B: Psychometrics of the localisation error (in degrees) for each speaker, of the global error and of the overall score for the truck horn signal (virtual sources are shaded).

In a pilot study with a prototype version of the system, the averages and standard deviations of the speech recognition score in all nine environments were analysed for a progressively larger number of normal-hearing participants, that is 10, 20, 30, 40 and 50 participants. This analysis showed that the variability of these psychometrics remained under the standard error in all tests when 40 to 50 participants were included and in 91% of the tests with 30 participants, but in only 76% of the tests with 20 and 10 participants. Stabilization of performance was therefore observed from 30 participants. This confirms that the sample used in the present study is sufficient to establish a normative reference.

Tables 1A and 1B present the psychometrics for the two stimuli used for localisation assessment. The results show that the mean localisation error is low on all sources, that is 2.36 degrees (mean score computed from the performance of all participants). Virtual sources tend to induce larger errors than point sources. However, the mean error on each source remains below the resolution of the system, that is 22.5 degrees. Moreover, the median error is 0° on almost every point or virtual source, confirming that the localisation responses are usually accurate. The 95% confidence interval is also lower than the resolution of the system. Reliability measures confirm that there is no difference in performance at the second test, with only one exception (car horn stimulus on virtual source 6). The standard error remains under 3° for all sources; the overall standard error is 0.30°. Thus, from a normative point of view, any localisation error of one speaker position or more can be considered significant. Finally, the overall average score, that is the mean localisation performance for all trials on all speakers for each participant, is 90.8% with a standard error of 1.12%. The lower limit of the confidence interval is 88.5%, delimiting the lower bound of normality.
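To make the two localisation metrics concrete, the sketch below computes an angular error that wraps around the 360° circle and an overall score taken as the percentage of exactly correct responses. Both definitions and the function names are assumptions for illustration; the article does not spell out how the error and the overall score were computed.

```python
def angular_error(target_deg, response_deg):
    """Smallest absolute angle between target and response on a 360-degree circle."""
    diff = abs(target_deg - response_deg) % 360.0
    return min(diff, 360.0 - diff)

def localisation_summary(trials):
    """trials: list of (target_deg, response_deg); returns (mean error, percent exact)."""
    errors = [angular_error(target, response) for target, response in trials]
    mean_error = sum(errors) / len(errors)
    percent_correct = 100.0 * sum(e == 0 for e in errors) / len(errors)
    return mean_error, percent_correct

# Hypothetical responses: two exact, two off by one source position (22.5 degrees).
mean_error, percent_correct = localisation_summary(
    [(0.0, 0.0), (45.0, 45.0), (90.0, 112.5), (337.5, 0.0)]
)
```

Note that a response at 0° to a target at 337.5° counts as a 22.5° error, not 337.5°, which is why the wrap-around step matters on a full circle.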

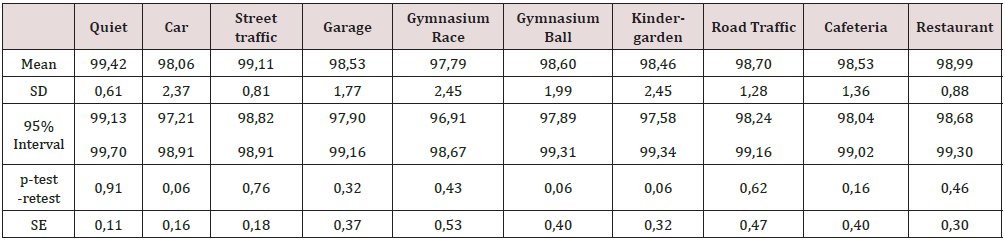

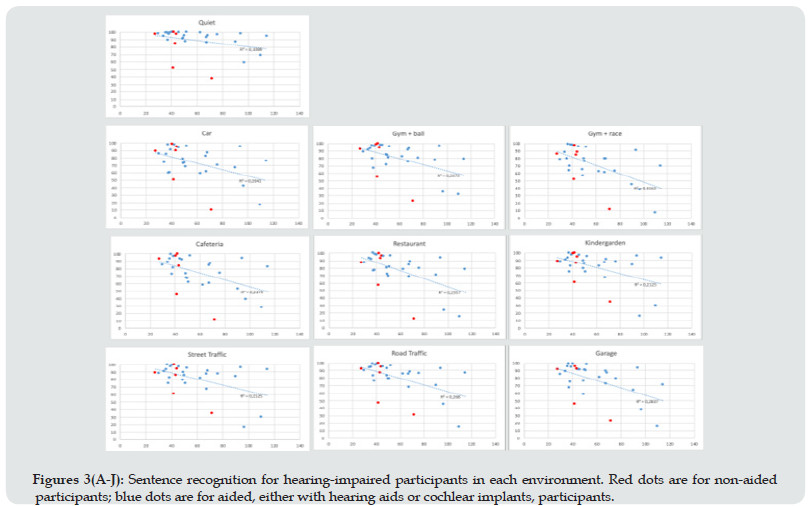

Table 2 shows that the average sentence recognition performance with the FrBio for normal-hearing participants in each environment lies between 97 and 100%, with a very low standard deviation. Test-retest showed no significant difference in performance; the maximum standard error is 1%. Figures 3 a-j present performances for hearing-impaired participants in each environment. Red dots are for non-aided participants; blue dots are for participants aided with either hearing aids or cochlear implants. In all situations a significant correlation is observed between scores and pure-tone averages, i.e., the more severe the hearing loss, the more difficult it is to understand sentences in daily environments. This expected relation supports the discriminant validity of the test. These data also show the potential of the Immersion 360 system to highlight the help one can get from a hearing device: while some severely impaired individuals performed quite well in any environment, others performed poorly even when aided. The immersive situation can thus be quite helpful to review device fittings in an everyday context. Overall, data from hearing-impaired participants confirm the test reliability, as no significant test-retest difference in performance is observed with this population either. The standard error is slightly larger, with a maximum of 6%.

This first study confirms that the Immersion 360 system can virtually reproduce everyday soundscapes and thus offer realistic testing conditions to assess auditory capacities, such as speech perception and localization. When applied to a cohort of normal-hearing participants, the Immersion 360 system rendered psychometrics for sentence recognition in the expected range for this population, that is between 97 and 100%. These psychometrics can be used as a normative reference for these tests. Complementary data from a cohort of hearing-impaired participants evidenced a slightly larger standard error of 7%, which is in line with the generally accepted 10 to 15% clinically significant difference for speech tests in hearing-impaired populations.

Table 2: Average performance for sentence recognition with the FrBio for normal-hearing participants in each environment.

Figure 3: Sentence recognition for hearing-impaired participants in each environment. Red dots are for non-aided participants; blue dots are for aided, either with hearing aids or cochlear implants, participants.

The larger localisation error observed for virtual sources could be related to some blurring of ITD information, as two physical sources are activated to produce the virtual signal. However, even if the virtual sources generated more uncertainty, the localisation error remained very low. The near-perfect performance observed in speech perception in all environments could be anticipated. Indeed, as reflected by the signal-to-noise ratios measured during the recording sessions of each environment, communication partners always try to obtain optimal speech intelligibility regardless of the environmental noise by keeping a positive signal-to-noise ratio. This observation questions the relevance of assessing speech recognition at very low signal-to-noise ratios (0 dB and less), as is frequently done in audiological clinics and in research.

Phase 3: Validation

Two experimental studies were conducted to validate the use of immersive auditory environments in hearing care for assessment of outcomes and for auditory training. The first study aimed to determine the efficacy of two SNR-improving technologies available for unilateral cochlear implant recipients under a realistic test condition. Indeed, newer hearing technologies allow access to several sound-cleaning and SNR-improving features designed to enhance speech understanding and improve listening comfort in challenging environments. It is important that these features be programmed appropriately, and that their effectiveness be assessed not only in controlled laboratory settings but also in the challenging environments they are designed to address, that is, real-life situations. The two SNR-improving technologies assessed were:

a) a CROS device (Naida Link CROS) worn on the contralateral, non-implanted ear and

b) an adaptive directional microphone (ADM) called UltraZoom, available on the Naida Link CROS and the Naida CI Q sound processor.

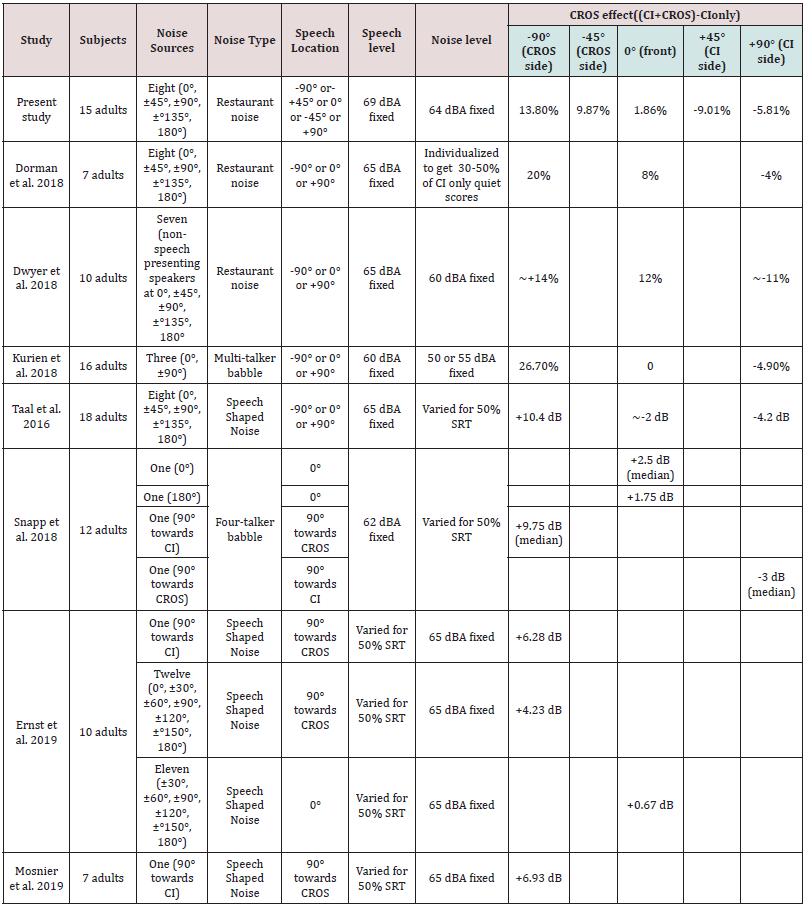

Evidence of benefits of CROS with or without UltraZoom activated has been shown in several recent studies in unilaterally implanted users. Some studies have utilized two- to four-speaker setups that may not entirely recreate real-life listening challenges [19-21]. Others have assessed the benefit of these technologies in more complex laboratory setups comprising eight or more noise sources presenting speech-shaped noise [22,23] or restaurant noise [24,25]. The primary objective of the present study was to assess the following with the Immersion 360 system: (a) the impact of noise on speech understanding when listening with a unilateral CI only with the ADM off (CI only), (b) the impact of using a CROS device in the contralateral ear with the ADM off on both devices (CI+CROS) and (c) the impact of listening with the ADM activated on one or both devices (CI onlyADM, (CI+CROS)ADM). The secondary objective was to compare these measurements with those from other studies.

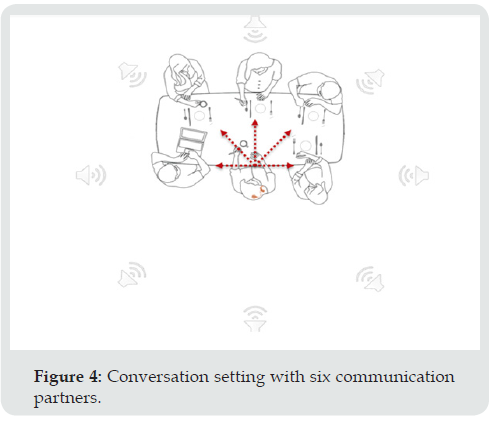

Fifteen adults (6 males, 9 females, median age 63.3 years with a range of 23 to 86 years) with a unilateral Advanced Bionics CI (CII or later) were tested using a dedicated Naida CI Q90 and Naida Link CROS. The Immersion 360 set-up simulated a conversation setting with six communication partners in a moderately noisy restaurant (Figure 4). Target stimuli consisted of FrBio sentences presented randomly from one of five speakers located at 0°, ±45° or ±90°. The study participant represented the sixth communication partner at this simulated restaurant outing. Noise was presented over 360° from all 8 speakers located 45° apart. Presentation levels and SNR were those measured in a real restaurant at the recording session, i.e., speech at 69 dBA and noise at 64 dBA (+5 dB). The order of test conditions (device configuration, ADM state, noise or quiet) was randomly varied across the subjects. Statistical analyses were conducted using Analysis of Variance (ANOVA) and Repeated Measures ANOVA (RMANOVA). Post hoc analyses were conducted using paired-sample t-tests.
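The +5 dB figure is simply the difference between the measured speech and noise levels (69 dBA − 64 dBA). The short Python sketch below shows this arithmetic and how a noise track could be scaled to reach a target SNR relative to a speech track. The function names and the RMS values are hypothetical, and the calibration procedure actually used in the Immersion 360 system is not described in the article.

```python
import math

def snr_db(speech_level_db, noise_level_db):
    """Signal-to-noise ratio in dB from two levels expressed in dB."""
    return speech_level_db - noise_level_db

def noise_gain_for_target_snr(speech_rms, noise_rms, target_snr_db):
    """Linear gain to apply to the noise so that speech/noise reaches target_snr_db."""
    return speech_rms / (noise_rms * 10 ** (target_snr_db / 20.0))

restaurant_snr = snr_db(69.0, 64.0)  # levels reported for the restaurant scene

# Hypothetical RMS amplitudes of uncalibrated speech and noise recordings.
gain = noise_gain_for_target_snr(speech_rms=0.2, noise_rms=0.5,
                                 target_snr_db=restaurant_snr)
achieved_snr = 20.0 * math.log10(0.2 / (0.5 * gain))
```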

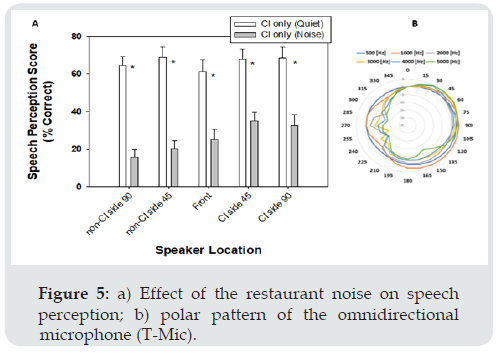

Figure 5A shows the effect of the restaurant noise on speech perception for unilaterally implanted listeners. In quiet, individual scores ranged from 22.43% to 100% reflecting the varied baseline speech understanding levels in the study participants. Mean scores were equivalent across the five target speaker locations (ANOVA, p=.84), indicating similar speech understanding with all simulated communication partners around the table. In noise, speech understanding was significantly lowered with every simulated communication partner (RMANOVA and posthoc paired sample t-Tests, p<.0001). Mean reduction in scores ranged from 33.01% when speech was located on the CI side to 48.77% when speech was located on the non-CI side. The distribution of performance across the five target speakers in noise is consistent with the polar pattern of the omnidirectional microphone (T-Mic) used in this test condition (Figure 5B).

Figure 5: a) Effect of the restaurant noise on speech perception; b) polar pattern of the omnidirectional microphone (T-Mic).

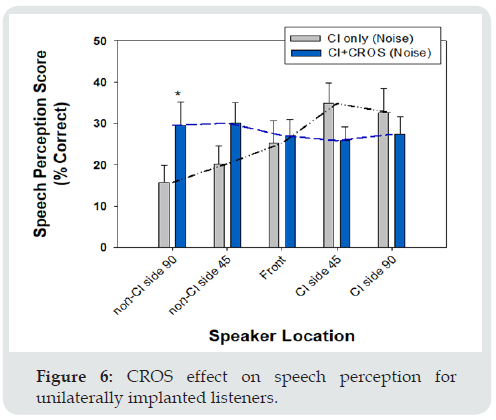

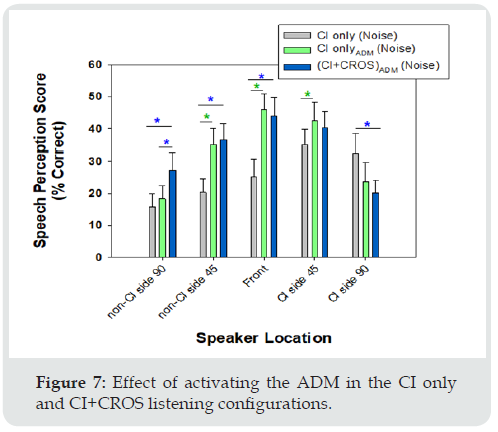

Figure 6 shows the ‘CROS effect’. An equalization of performance between the non-implant and the implant side is observed with the use of CROS in noise (ANOVA, p=0.96). Scores significantly improved as compared to CI only by +13.8% for the 90° speaker on the CROS side (p=.0014). The change in scores at the remaining locations [+9.87% at 45° towards CROS (p=.06); +1.86% at 0° (p=.54); -9.01% at 45° towards CI (p=.056); -5.18% at 90° towards CI (p=.262)] was not statistically significant. The result was a balance of the soundscape across the two sides of the head, as also demonstrated by prior studies. Each communication partner is now perceived equally.

Figure 7 shows the effect of activating the ADM in the CI only and CI+CROS listening configurations. Speech understanding in noise was significantly improved in the CI onlyADM listening configuration as compared to CI only (RMANOVA, p=0.023). Scores varied significantly as a function of location of the target speaker/communication partner (ANOVA, p=.0009); speech understanding was significantly improved for speakers located at 45° towards the non-CI ear (p=.0018), in front (p<.0001) and 45° towards the CI side (p=.026). That is, speech understanding was enhanced in noise for communication partners located within ±45° and was statistically equivalent for speakers at ±90°.

A similar trend was observed in the (CI+CROS)ADM condition over CI only (RMANOVA, p=.015) with a significant effect of speaker location (ANOVA, p=.0103). Scores were significantly better for speakers located between 0° and 45° towards the CROS side, equivalent for the speaker at 45° towards the CI and worse for the speaker at 90° towards the CI. Outcomes with CI onlyADM and (CI+CROS)ADM were equivalent overall (RMANOVA, p=.87). One difference was better scores for the 90° speaker towards the CROS with (CI+CROS)ADM than with CI onlyADM (p=.024). The outcomes of this study with Immersion 360 are aligned with those from other CROS studies conducted with unilateral CI subjects over the last five years (Table 3). Thus, this first study confirms that assessment in an immersive environment configured for a clinical setting can validate the positive impact of CROS and beamforming in a realistic, challenging situation for unilateral cochlear implant users. While the CROS feature equalizes the soundscape between the implanted and the non-implanted ear, the UltraZoom feature enhances the perception of the front soundscape. Synergy of both features results in improved speech perception in real-life conversation situations, such as the restaurant environment explored in this study.

The second study explored environmental sound perception in CI users. While research on CIs has traditionally focused on speech perception, few studies have examined everyday sound perception skills in CI users [26-29]. Yet, these studies have highlighted important difficulties in recognizing or identifying non-linguistic everyday sounds. However, many CI clinicians affirm there is no need to train sound perception, as these skills emerge spontaneously through daily experience with environmental sounds. To better understand why clinical observations diverge from scientific findings, this second study aimed to compare non-linguistic environmental sound perception skills in post-lingually deafened CI adults in two contexts: a standard laboratory setting (scientific data) and a virtual space reproducing daily sound scenes (real-life observations). Participants formed an experimental group of 6 adult CI users (5 males, 1 female, mean age 73 years with a range of 68 to 81 years, mean CI use of 5 years with a range of 0.6 to 13 years) and a control group of 20 hearing adults (10 males, 10 females, mean age 56 years with a range of 48 to 65 years).

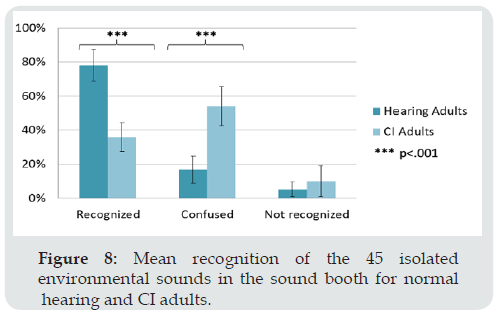

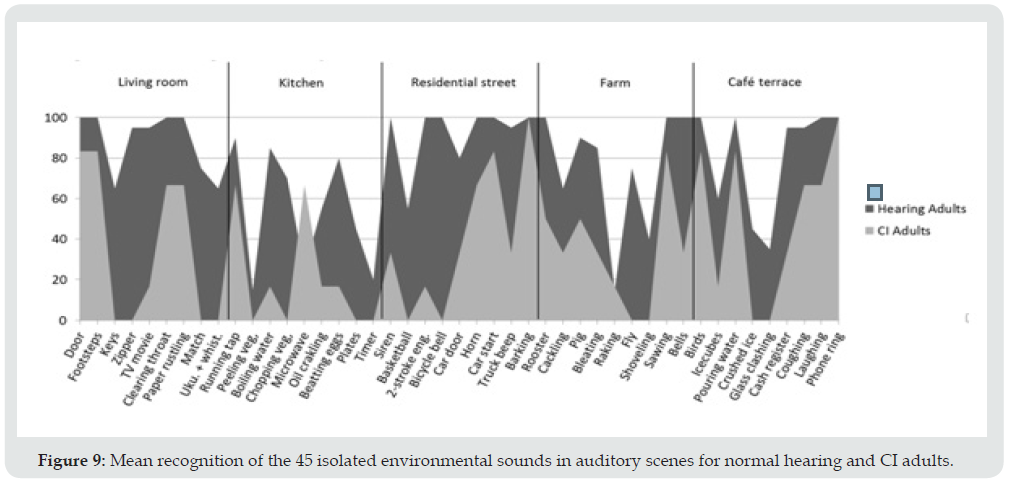

The relationship between isolated sound recognition skills and daily sound scene description capabilities was investigated using a cross-sectional design. Two auditory tasks were conducted in an open-set format. The first was recognition of 45 isolated environmental sounds presented in an audiometric booth. The second was recognition of the same sounds, equally distributed across 5 familiar sound scenes presented in a virtual sound space via the Immersion 360 system. The context of each scene was provided to the participant beforehand. The mean recognition of the 45 isolated environmental sounds in the sound booth was significantly lower in CI adults (36% correct; M=16.17, SD=5.0) than in normal-hearing (NH) adults (78% correct; M=35.10, SD=4.28) (Mann-Whitney, p<.001) (Figure 8). While 17/45 sounds were recognized by all NH subjects, only 2/45 (telephone ringing and dog barking) were recognized by all CI participants. Conversely, 14 sounds were never recognized by CI users (0 for NH adults). In auditory scenes, CI users recognized on average 34% of the same 45 sounds emerging from the environmental background (M=15.3, SD=7.45), while NH participants recognized 91% of the sounds (M=41, SD=2.43) (Figure 9). A high correlation was found between recognition of isolated sounds and recognition of sounds in scenes (rs=.96; p=.003).

Figure 8: Mean recognition of the 45 isolated environmental sounds in the sound booth for normal hearing and CI adults.

Figure 9: Mean recognition of the 45 isolated environmental sounds in auditory scenes for normal hearing and CI adults.

Thus, in a laboratory setting, recognition of isolated environmental sounds is significantly reduced in CI recipients compared with normal-hearing peers. These results are consistent with previous studies [26,28,29]. In an immersive environment reproducing daily sound experience, similar outcomes are observed. Environmental context appears to be helpful only for normal-hearing adults, whose performance improves to an almost perfect score. This observation emphasizes the importance of auditory scenes in the recognition of environmental sounds. It also shows that sound cues from the environment are not readily accessible or helpful to cochlear implant users in relieving (some of) the disability experienced in real life. Finally, it supports the relevance of assessing, and training, hearing perception in an immersive environment, as laboratory settings do not allow an ecological perspective on hearing care.
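The percentages reported for this second study follow directly from the mean raw scores out of 45 sounds. As a minimal sketch (not the authors' analysis), the conversion and the change in score between the sound booth and the auditory scenes can be checked as below. The mean scores are taken from the text; the names `reported_means`, `percent_correct` and `context_gain` are introduced here for illustration only.

```python
TOTAL_SOUNDS = 45  # number of environmental sounds in each task (from the text)

# Mean number of sounds recognized, as reported in the text.
# Keys are (group, task); "CI" = cochlear implant users, "NH" = normal hearing.
reported_means = {
    ("CI", "booth"): 16.17,
    ("NH", "booth"): 35.10,
    ("CI", "scenes"): 15.3,
    ("NH", "scenes"): 41.0,
}


def percent_correct(mean_score, total=TOTAL_SOUNDS):
    """Convert a mean raw score into a percentage of the total item count."""
    return 100.0 * mean_score / total


# Percent correct per group and task, rounded to whole percentages.
percentages = {
    key: round(percent_correct(mean)) for key, mean in reported_means.items()
}

# Change in percent correct when the same sounds are heard within scenes
# (positive values mean the scene context helped).
context_gain = {
    group: percent_correct(reported_means[(group, "scenes")])
    - percent_correct(reported_means[(group, "booth")])
    for group in ("CI", "NH")
}

for (group, task), pct in percentages.items():
    print(f"{group} {task}: {pct}% correct")
for group, gain in context_gain.items():
    print(f"{group} change from booth to scenes: {gain:+.1f} points")
```

Under these figures, the NH group gains about 13 percentage points in scenes while the CI group changes by about 2 points in the opposite direction, which is the pattern summarized above. This is descriptive arithmetic on group means only; it does not reproduce the Mann-Whitney or correlation analyses.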

Conclusion

Our research program confirms that immersion in an acoustical virtual reality environment offers a unique opportunity to address hearing care under an ecological model. VR systems can be designed from a clinical perspective to support the assessment and the technological or therapeutic rehabilitation of persons with hearing impairment. The concept could be taken further by integrating visual information into the immersive environments, thus closing the gap with the everyday communication situations experienced by hearing-impaired people.

References

- Nilsson M, Soli SD, Sullivan JA (1994) Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. The Journal of the Acoustical Society of America 95(2): 1085-1099.

- Fougeyrollas P, Boucher N, Edwards G, Grenier Y, Noreau L (2019) The Disability Creation Process Model: A Comprehensive Explanation of Disabling Situations as a Guide to Developing Policy and Service Programs. Scandinavian Journal of Disability Research 21: 25–37.

- Rubio Tamayo JL, Barrio MG, García FG (2017) Immersive Environments and Virtual Reality: Systematic Review and Advances in Communication, Interaction and Simulation. Multimodal Technologies and Interact 1: 21.

- Dalgarno B, Lee MJ (2010) What are the learning affordances of 3-D virtual environments? British Journal of Educational Technology 41: 10-32.

- Revit LJ, Killion MC, Conley CL (2007) Developing and Testing a Laboratory Sound System That Yields Accurate Real-World Results. Hearing Review 14(11): 54.

- Revit LJ, Schulein RB, Julstrom S (2002) Toward accurate assessment of real-world hearing aid benefit. Hearing Review 9 (3438): 51.

- Compton Conley CL, Neuman AC, Killion MC, Levitt H (2004) Performance of directional microphones for hearing aids: real-world versus simulation. J Am Acad Audiol 15: 440–455.

- Weller T, Best V, Buchholz JM, Young T (2016) A Method for Assessing Auditory Spatial Analysis in Reverberant Multitalker Environments. J Am Acad Audiol 27: 601–611.

- Lau ST, Pichora Fuller MK, Li KZH, Singh G, Campos JL (2016) Effects of Hearing Loss on Dual-Task Performance in an Audiovisual Virtual Reality Simulation of Listening While Walking. J Am Acad Audiol 27: 567–587.

- Grimm G, Kollmeier B, Hohmann V (2016) Spatial Acoustic Scenarios in Multichannel Loudspeaker Systems for Hearing Aid Evaluation. J Am Acad Audiol 27: 557–566.

- Berry A, Gauthier PA, Hugues Nélisse H, Sgard F (2016) Reproduction d'environnements sonores industriels en vue d’applications aux études d’audibilité des alarmes et autres signaux : preuve de concept.

- Best V, Keidser G, Freeston K, Buchholz JM (2016) A Dynamic Speech Comprehension Test for Assessing Real-World Listening Ability. J Am Acad Audiol 27: 515–526.

- Oreinos C, Buchholz JM (2016) Evaluation of Loudspeaker-Based Virtual Sound Environments for Testing Directional Hearing Aids. Journal of the American Academy of Audiology 27(7): 541-556.

- Bergeron F, Fecteau S, Cloutier D, Hotton M, Bouchard B (2012) Assessment of speech perception in a virtual soundscape. Adult Hearing Screening conference, Como Lake.

- Smeds K, Wolters F, Rung M (2015) Estimation of signal-to-noise ratios in realistic sound scenarios. Journal of the American Academy of Audiology 26(2): 183-196.

- Wolters F, Smeds K, Schmid E, Christensen EK, Norup V (2016) Common Sound Scenarios: A Context-Driven Categorization of Everyday Sound Environments for Application in Hearing-Device Research. J Am Acad Audiol 27: 527–540.

- Dillon H, Birtles G, Lovegrove R (1999) Measuring the outcomes of a national rehabilitation program: normative data for the Client Oriented Scale of Improvement (COSI) and the Hearing Aid User’s Questionnaire (HAUQ). J Am Acad Audiol 10: 67–79.

- Bergeron F, Berland A, Fitzpatrick EM, Vincent C, Giasson A, et al. (2019) Development and validation of the FrBio, an international French adaptation of the AzBio sentence lists. International Journal of Audiology 58: 510-515.

- Kurien G, Hwang E, Smilsky K, Smith L, Lin V, et al. (2019) The Benefit of a Wireless Contralateral Routing of Signals (CROS) Microphone in Unilateral Cochlear Implant Recipients. Otology & Neurotology 40(2): e82–e88.

- Mosnier I, Lahlou G, Flament J, Mathias N, Ferrary E, et al. (2019) Benefits of a contralateral routing of signal device for unilateral Naída CI cochlear implant recipients. European Archives of Oto-Rhino-Laryngology 276(8): 2205–2213.

- Snapp HA, Hoffer ME, Spahr A, Rajguru S (2019) Application of Wireless Contralateral Routing of Signal Technology in Unilateral Cochlear Implant Users with Bilateral Profound Hearing Loss. Journal of the American Academy of Audiology 30(7): 579–589.

- Taal CH, van Barneveld DC, Soede W, Briaire JJ, Frijns JH (2016) Benefit of contralateral routing of signals for unilateral cochlear implant users. The Journal of the Acoustical Society of America 140(1): 393.

- Ernst A, Baumgaertel RM, Diez A, Battmer RD (2019) Evaluation of a wireless contralateral routing of signal (CROS) device with the Advanced Bionics Naída CI Q90 sound processor. Cochlear Implants International 20(4): 182–189.

- Dorman MF, Cook Natale S, Agrawal S (2018) The Value of Unilateral CIs, CI-CROS and Bilateral CIs, with and without Beamformer Microphones, for Speech Understanding in a Simulation of a Restaurant Environment. Audiology & Neuro-Otology 23(5): 270–276.

- Dwyer RT, Kessler D, Butera IM, Gifford RH (2019) Contralateral Routing of Signal Yields Significant Speech in Noise Benefit for Unilateral Cochlear Implant Recipients. Journal of the American Academy of Audiology 30(3): 235–242.

- Inverso Y, Limb CJ (2010) Cochlear implant-mediated perception of nonlinguistic sounds. Ear and Hearing 31: 505–514.

- Looi V, Arnephy J (2010) Environmental Sound Perception of Cochlear Implant Users. Cochlear Implants Int 11: 203–227.

- Shafiro V, Gygi B, Cheng MY, Vachhani J, Mulvey M (2009) Factors in the Perception of Environmental Sounds by Patients with Cochlear Implants.

- Shafiro V, Gygi B, Cheng MY, Vachhani J, Mulvey M (2011) Perception of environmental sounds by experienced cochlear implant patients. Ear and Hearing 32: 511–523.
