Lupine Publishers Group


ISSN: 2643-6744

Review Article | Open Access

A Benchmark Model for Language Models Towards Increased Transparency

Volume 2 - Issue 3

Ayse Arslan*

- Department of Computer Science, Oxford Alumni of Northern California

Received: November 30, 2022; Published: December 12, 2022

*Corresponding author: Ayse Arslan, Department of Computer Science, Oxford Alumni of Northern California

DOI: 10.32474/CTCSA.2022.02.000139

Abstract

One of the most advanced AI technologies of recent years is the language model (LM), and the rapid proliferation of LMs necessitates comparing, or benchmarking, many LMs to enhance the transparency of these models. The purpose of this study is to provide a fuller characterization of LMs, rather than to focus on a single aspect, in order to increase societal impact. After a brief overview of the constituents of a benchmark and the features of transparency, this study explores the main aspects of an evaluation (scenario, adaptation, and metric) needed to provide a roadmap for evaluating language models. Given the lack of studies in the field, it is a step towards the design of more sophisticated models and aims to raise awareness of the importance of developing benchmarks for AI models.

Introduction

The original promise of computing was to solve information overload in science. In his 1945 essay “As We May Think”, Vannevar Bush (1945) proposed computers as a solution for managing the growing mountain of information. Licklider (1960) expanded on this with the vision of a symbiotic relationship between human beings and machines, in which computers would be “preparing the way for insights and decisions in scientific thinking” (Licklider, 1960). In this spirit, LMs have, over the past couple of years, continued to push the limits of what is possible with deep neural networks. However, when it comes to topics such as understanding, reasoning, planning, and common sense, scientists are divided about how to assess LMs [1].

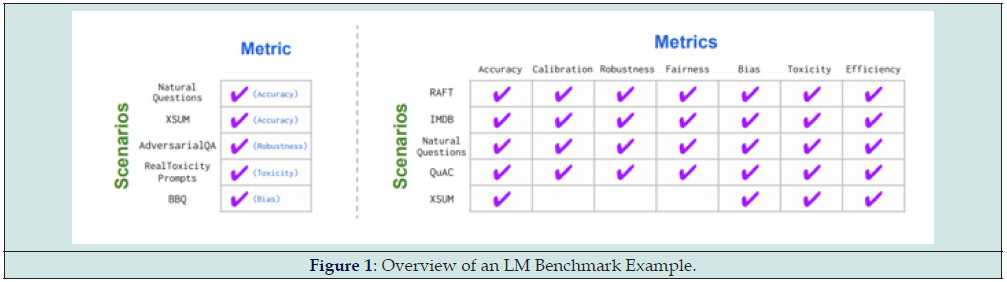

At its core, an LM is a box that takes in text and generates text (Figure 1). LMs are general-purpose text interfaces that can be applied across a vast range of scenarios, and for each scenario there may be a broad set of desiderata, such as accuracy, fairness, and efficiency, among many others. The rapid proliferation of LMs therefore necessitates comparing, or benchmarking, many language models against one another. Benchmarks encode values and priorities (Ethayarajh and Jurafsky, 2020; Birhane et al., 2022) that set directions for the AI community to improve upon (Spärck Jones and Galliers, 1995; Spärck Jones, 2005; Kiela et al., 2021; Bowman and Dahl, 2021; Raji et al., 2021) [2].

Overview of Benchmarks

Benchmarks are one of the thorniest problems in AI research. On the one hand, researchers need a way to evaluate and compare their models; on the other hand, some concepts are very hard to measure. A major problem underpinning benchmarks is that we usually view them from a human-intelligence perspective. As a simplified example, we consider chess a complicated intelligence challenge because, on their way to mastering it, human beings must acquire a set of cognitive skills through hard work and talent. Yet, from a computational perspective, a good algorithm with the right inductive biases can offer a shortcut to finding good chess moves without those skills. As this example demonstrates, even some of the most carefully crafted benchmarks can be prone to computational shortcuts. In other words, while benchmarks are a good tool for comparing machine learning models against one another, they are not anthropomorphic measures of cognitive skills in machines [3]. When implemented and interpreted appropriately, benchmarks enable the broader community to better understand AI technology and influence its trajectory. In general, a benchmark involves three elements (Figure 1).

a) Broad Coverage and Recognition of Incompleteness: As it is not possible to consider all the scenarios and desiderata that (could) pertain to LMs, a benchmark should provide a top-down taxonomy and make explicit all the major scenarios and metrics that are missing.

b) Multi-metric Measurement: Societally beneficial systems reflect many values, not just accuracy. A benchmark should represent these plural desiderata, evaluating every desideratum for each scenario considered.

c) Standardization: As the object of evaluation is the LM, not a scenario-specific system, the strategy for adapting an LM to a scenario should be controlled for. Overall, a benchmark builds transparency by assessing LMs in their totality. Rather than focusing on a specific aspect, the aim is to strive for a fuller characterization of LMs to improve scientific understanding and increase societal impact.
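The first two elements can be pictured as a scenario x metric grid in which every cell is either measured or explicitly listed as missing. The Python sketch below is illustrative only: the scenario and metric names and the two toy scores are hypothetical, and the point is simply how a benchmark might report its own gaps instead of hiding them.

```python
from itertools import product

# Hypothetical taxonomy: these scenario and metric names are illustrative only.
SCENARIOS = ["question_answering", "summarization", "sentiment_analysis"]
METRICS = ["accuracy", "calibration", "robustness", "fairness", "toxicity"]


def coverage_report(results: dict) -> dict:
    """Split the scenario x metric grid into measured and missing cells.

    `results` maps (scenario, metric) pairs to a measured score.
    """
    grid = list(product(SCENARIOS, METRICS))
    measured = [cell for cell in grid if cell in results]
    missing = [cell for cell in grid if cell not in results]
    return {
        "coverage": len(measured) / len(grid),
        "missing": missing,  # reported explicitly instead of silently omitted
    }


# Toy results: only accuracy has been measured, and only for two scenarios.
toy_results = {
    ("question_answering", "accuracy"): 0.71,
    ("summarization", "accuracy"): 0.42,
}
report = coverage_report(toy_results)
print(f"coverage = {report['coverage']:.2f}")  # coverage = 0.13
for scenario, metric in report["missing"][:3]:
    print("missing:", scenario, metric)
```

Reporting the missing cells alongside the coverage fraction is one way to make "recognition of incompleteness" concrete rather than implicit.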

A benchmark for LMs has two levels:

a) an abstract taxonomy of scenarios and metrics to define the design space for LM evaluation and

b) a concrete set of implemented scenarios and metrics that were selected to prioritize coverage (e.g. different English varieties), value (e.g. user-facing applications), and feasibility (e.g. limited engineering resources).

When designing a benchmark, several key considerations should be taken into account. First, even when evaluation is standardized, in particular by evaluating all models on the same scenarios and the same metrics, individual models may be better suited to particular scenarios, particular metrics, and particular prompts or adaptation methods [4-5].

Moreover, while the evaluation itself may be standardized, the computational resources used to train these models may differ greatly (e.g., more resource-intensive models generally fare better in such evaluations).

Furthermore, models may differ significantly in their exposure to the particular data distribution or evaluation instances in use, with the potential for train-test contamination. Even for the same scenario, the adaptation method that maximizes accuracy can differ across models, which poses a fundamental challenge for what it means to standardize LM evaluation fairly across models. Given the myriad scenarios in which LMs could provide value, it would be appealing for many reasons if upstream perplexity on LM objectives reliably predicted downstream accuracy. Unfortunately, when such comparisons are made across model families, this kind of prediction may not work well, even with bits-per-byte (BPB), which is more comparable across models than perplexity because it does not depend on the tokenizer [6].
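The difference between perplexity and bits-per-byte can be shown with a little arithmetic. Perplexity averages the negative log-likelihood over tokens, so it depends on how a model's tokenizer splits the text; BPB divides the same total negative log-likelihood (converted to bits) by the number of UTF-8 bytes, which is fixed by the text. The log-probabilities below are made up for illustration: both hypothetical models assign the same total likelihood to the text but use different tokenizations.

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """Token-level perplexity from natural-log token probabilities."""
    nll = -sum(token_logprobs)
    return math.exp(nll / len(token_logprobs))


def bits_per_byte(token_logprobs: list[float], text: str) -> float:
    """Total negative log-likelihood in bits, divided by the UTF-8 byte count."""
    nll_nats = -sum(token_logprobs)
    n_bytes = len(text.encode("utf-8"))
    return nll_nats / (math.log(2) * n_bytes)


text = "language models"  # 15 UTF-8 bytes
# Hypothetical log-probs: both models give the text a total of -12 nats,
# but model A uses 3 tokens and model B uses 6 tokens.
model_a = [-4.0, -4.0, -4.0]
model_b = [-2.0] * 6

print(perplexity(model_a), perplexity(model_b))  # e**4 vs. e**2: they differ
print(bits_per_byte(model_a, text), bits_per_byte(model_b, text))  # identical
```

Because the byte count does not change with the tokenizer, BPB gives the two models the same score here, whereas their perplexities differ by a factor of about 7.4.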

Overview of LMs

LMs belong to NLP (natural language processing), a subfield of AI. As in other fields of AI, the challenge of transparency in AI models and datasets continues to receive increasing attention from academia and industry. When it comes to developing an AI model, producers are the upstream creators of datasets and documentation, responsible for dataset collection, ownership, launch, and maintenance [7-10]. Agents are stakeholders who read transparency reports and have the agency to use, or to determine how they or others might use, the described datasets or AI systems. Agents are distinct from users, who are individuals and representatives interacting with products that rely on models trained on the dataset. Users may consent to providing their data as part of the product experience, and they require a significantly different set of explanations and controls grounded in product experiences. Dataset design also plays a crucial role in LM development. For example, all data can be processed into a common markdown format to blend knowledge across sources, and task-specific tokens can be used in the interface to support different types of knowledge. Uncurated data, by contrast, means more tokens with limited transfer value for the target use case, which wastes compute budget.

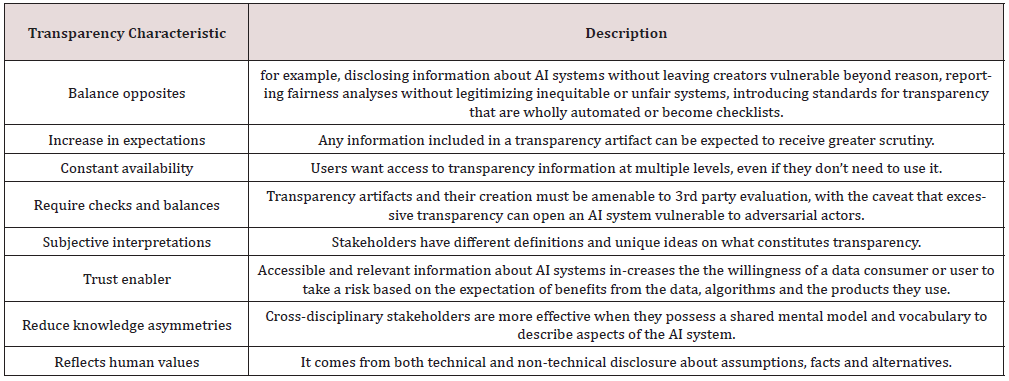

Transparency refers to a clear, easily understandable, plain-language explanation of what something is, what it does, and why it does that. Table 1 lists core aspects of transparency. Yet attempts to introduce standardized and sustainable transparency mechanisms are hindered by real-world constraints arising from the diverse goals, workflows, and backgrounds of the individual stakeholders participating in the life cycles of datasets and AI systems. To increase the transparency of NLP models, it can be useful to understand the different tasks they accomplish.

Question answering (QA) is a fundamental task in NLP that underpins many real-world applications, including web search, chatbots, and personal assistants. QA is very broad in terms of the questions that can be asked and the skills required to arrive at the answer, covering general language understanding, integration of knowledge, and reasoning [11].

Information retrieval (IR), the class of tasks concerned with searching large unstructured collections (often text collections), is central to numerous user-facing applications. IR has a long tradition of study (Salton and Lesk, 1965; Salton, 1971; Spärck Jones, 1972; Salton and McGill, 1983; Manning et al., 2008; Lin et al., 2021a) and is one of the most widely deployed language technologies.

Text summarization is an established research direction in NLP (Luhn, 1958; Mani, 1999; Spärck Jones, 1999; Nenkova and McKeown, 2012), with growing practical importance given the ever-increasing volume of text that would benefit from summarization. One can formulate text summarization as an unstructured sequence-to-sequence problem, where a document (e.g. a CNN news article) is the input and the LM is tasked with generating a summary that resembles the reference summary (e.g. the bullet-point summary provided by CNN with the article). To evaluate model performance, the model-generated summary is compared against the human-authored reference summary using automated metrics for overall quality (Lin, 2004; Zhang et al., 2020b), faithfulness (Laban et al., 2022; Fabbri et al., 2022), and extractiveness (Grusky et al., 2018). Extractiveness refers to the extent to which model summaries copy from the input document. Measuring and improving faithfulness is especially important, since unfaithful systems may be harmful: when deployed in real-world settings, they can spread misinformation, including dangerous yet hard-to-detect errors [12-15].

Sentiment analysis has blossomed into its own subarea of the field, with many works broadening and deepening the study of sentiment beyond its initial binary text-classification framing (Wiebe et al., 2005; McAuley et al., 2012; Socher et al., 2013; Nakov et al., 2016; Potts et al., 2021).
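The extractiveness measure cited above for summarization (Grusky et al., 2018) is built on "extractive fragments": spans of the summary that also appear verbatim in the article. Coverage is the share of summary tokens inside such fragments, and density is the average squared fragment length, which rewards long copied spans. The sketch below is a simplified greedy variant under assumed whitespace tokenization; it is not the exact reference implementation.

```python
def extractive_fragments(article: list[str], summary: list[str]) -> list[list[str]]:
    """Greedily find shared token spans between summary and article.

    At each summary position, take the longest span that also appears
    contiguously in the article; skip the token if it matches nothing.
    """
    fragments = []
    i = 0
    while i < len(summary):
        best = 0
        for j in range(len(article)):
            k = 0
            while (i + k < len(summary) and j + k < len(article)
                   and summary[i + k] == article[j + k]):
                k += 1
            best = max(best, k)
        if best > 0:
            fragments.append(summary[i:i + best])
            i += best
        else:
            i += 1
    return fragments


def coverage_and_density(article: str, summary: str) -> tuple[float, float]:
    a, s = article.split(), summary.split()  # assumed whitespace tokenization
    frags = extractive_fragments(a, s)
    coverage = sum(len(f) for f in frags) / len(s)
    density = sum(len(f) ** 2 for f in frags) / len(s)
    return coverage, density


print(coverage_and_density("the cat sat on the mat", "the cat sat quietly"))
# (0.75, 2.25): one copied 3-token fragment in a 4-token summary
```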

Text classification has a long history in NLP (see Yang and Pedersen, 1997; Yang, 1999; Joachims, 1998; Aggarwal and Zhai, 2012), with language identification, sentiment analysis, topic classification, and toxicity detection among the most prominent tasks in this family. Focusing on the fairness of models is essential to ensuring that technology plays a positive role in social change (Friedman and Nissenbaum, 1996; Abebe et al., 2020; Bommasani et al., 2021). Here, fairness refers to disparities in the task-specific accuracy of models across social groups. One way to operationalize fairness is counterfactual fairness (Dwork et al., 2012; Kusner et al., 2017), which concerns model behavior on counterfactual data generated by perturbing existing test examples (cf. Ma et al., 2021; Qian et al., 2022). In contrast, bias refers to properties of model generations, i.e. it has no (explicit) relationship with accuracy or with the specifics of a given task; bias measures depend on the occurrence statistics of words signifying a demographic group across model generations. Toxicity detection (and the related tasks of hate speech and abusive language detection) is the task of identifying when input data contains toxic content; it arose from the need for content moderation on the Internet (Schmidt and Wiegand, 2017; Rauh et al., 2022). Critiques of the task have noted that (i) the study of toxicity is overly reductive and divorced from use cases (Diaz et al., 2022), (ii) standard datasets often lack sufficient context to make reliable judgments (Pavlopoulos et al., 2020; Hovy and Yang, 2021), and (iii) the construct of toxicity depends on the annotator (Sap et al., 2019a; Gordon et al., 2022).
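Counterfactual fairness, as described above, can be illustrated by perturbing test inputs (here, swapping gendered terms) and checking whether predictions survive the swap. The sketch below assumes a small, hypothetical swap list and a deliberately biased toy classifier standing in for an LM-based system; an example counts as correct only if both the original and its counterfactual are classified correctly.

```python
import re

# Hypothetical swap list for illustration; real studies use curated term lists,
# and mappings such as "her" -> "his" are ambiguous (her/him/his).
SWAPS = {"he": "she", "she": "he", "his": "her", "her": "his",
         "man": "woman", "woman": "man"}


def counterfactual(text: str) -> str:
    """Swap demographic terms word by word, keeping everything else intact."""
    def repl(match: re.Match) -> str:
        word = match.group(0)
        swapped = SWAPS.get(word.lower())
        if swapped is None:
            return word
        return swapped.capitalize() if word[0].isupper() else swapped
    return re.sub(r"[A-Za-z]+", repl, text)


def accuracy(model, examples) -> float:
    return sum(model(x) == y for x, y in examples) / len(examples)


def counterfactual_worst_case_accuracy(model, examples) -> float:
    """An example counts as correct only if the original and the
    counterfactual version are both classified correctly."""
    hits = sum(model(x) == y and model(counterfactual(x)) == y
               for x, y in examples)
    return hits / len(examples)


def toy_model(text: str) -> str:
    # Deliberately biased toy classifier standing in for an LM-based system.
    words = text.lower().split()
    if "she" in words:
        return "negative"
    return "positive" if "great" in words else "negative"


examples = [("He said the movie was great", "positive"),
            ("She said the movie was great", "positive"),
            ("He said the movie was dull", "negative")]
print(accuracy(toy_model, examples))                            # about 0.67
print(counterfactual_worst_case_accuracy(toy_model, examples))  # about 0.33
```

The gap between the two numbers is what a counterfactual check is meant to surface: the toy model's average accuracy hides the fact that its predictions flip when only the gendered term changes.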
Beyond toxicity detection as a classification task, the toxicity of model generations is another crucial concept for LMs; here, toxicity serves as an umbrella term for related concepts such as hate speech, violent speech, and abusive language (see Talat et al., 2017). To operationalize toxicity measurement, one can use the Perspective API (Lees et al., 2022) to detect toxic content in model generations. Given these features of LMs, the next section explores a conceptual framework for designing an LM benchmark [16].
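A minimal sketch of toxicity measurement with the Perspective API follows. The request and response shapes follow the public `comments:analyze` method (the `TOXICITY` attribute and its `summaryScore`), but the 0.5 threshold for counting a generation as toxic is an assumption chosen for illustration, and the network call in `score_text` needs a valid API key. The usage at the end works offline on a mocked response.

```python
import json
import urllib.request

ENDPOINT = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str) -> dict:
    """Request body asking Perspective for the TOXICITY attribute only."""
    return {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {"TOXICITY": {}},
    }


def parse_toxicity(response: dict) -> float:
    """Extract the summary TOXICITY probability from a Perspective response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def score_text(text: str, api_key: str) -> float:
    """Score one model generation (requires network access and an API key)."""
    req = urllib.request.Request(
        f"{ENDPOINT}?key={api_key}",
        data=json.dumps(build_request(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return parse_toxicity(json.load(resp))


def toxic_fraction(scores: list[float], threshold: float = 0.5) -> float:
    """Share of generations whose toxicity score reaches the (assumed) threshold."""
    return sum(s >= threshold for s in scores) / len(scores)


# Offline usage with a mocked response; no network call is made here.
mock = {"attributeScores": {"TOXICITY": {"summaryScore": {"value": 0.82}}}}
scores = [parse_toxicity(mock), 0.10, 0.30, 0.55]
print(toxic_fraction(scores))  # 0.5
```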

Conceptual Model

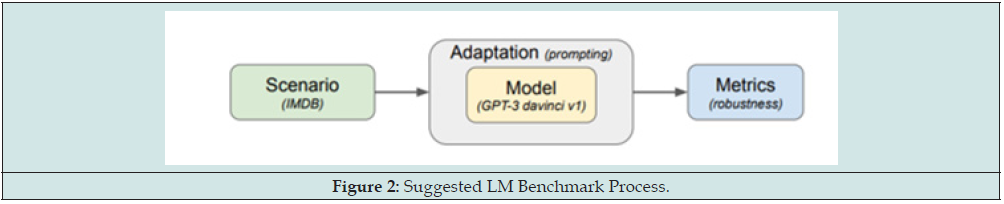

This study suggests implementing the following aspects when designing an LM benchmark (Figure 2):

Taxonomy: One can taxonomize the vast design space of language model evaluation into scenarios and metrics. By stating this taxonomy, one can select systematically from this space, which makes explicit both priorities in benchmark design and the limitations in the benchmark at present.

Broad Coverage: Given the taxonomy, one can select and implement core scenarios for which the major metrics (accuracy, calibration, robustness, fairness, bias, toxicity, efficiency) can be measured comprehensively.

Evaluation of Existing Models: One can evaluate existing LMs under the standardized conditions of the benchmark, so that models can be compared directly across many scenarios and metrics. These models may vary in public accessibility: some are open, others have limited access, and a few may be closed.

Empirical Findings: Such an extensive evaluation can offer guidance for future language model development and ample opportunities for further analysis.

As seen in Figure 2, evaluating an LM requires three aspects (scenario, adaptation, and metric), which together provide a roadmap for how to evaluate language models:

a) Scenarios: A scenario instantiates a desired use case for an LM; scenarios are what we want models to do. A scenario consists of a set of instances, each of which consists of (i) an input (a string) and (ii) a list of references. Each reference is a string annotated with properties relevant for evaluation (e.g. is it correct or acceptable?).

b) Adaptation: Adaptation is the procedure that transforms an LM, along with training instances, into a system that can make predictions on new instances. Examples of adaptation procedures include prompting, lightweight fine-tuning, and fine-tuning.

We define a language model to be a black box that takes as input a prompt (string), along with decoding parameters (e.g. temperature). The model outputs a completion (string), along with log probabilities of the prompt and completion. Viewing language models as text-to-text abstractions is important for two reasons:

i) First, while the prototypical LM is usually a dense Transformer trained on raw text, LMs could also use an external document store (Lewis et al., 2020c), issue search queries on the web (Nakano et al., 2021), or be trained on human preferences (Ouyang et al., 2022; Bai et al., 2022). An ideal evaluation should be agnostic to these implementation details.

ii) Second, the text-to-text abstraction is a convenient general interface that can capture all the (text-only) tasks of interest, an idea that was pioneered by McCann et al. (2018) and Raffel et al. (2019).

c) Metrics: To determine how well the model performs, one can compute metrics over these completions and probabilities. Metrics concretely operationalize the abstract desiderata required for useful systems [17-18].
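The three components above (scenario instances with tagged references, adaptation, and metrics over completions) can be sketched as a few small data types. The sketch assumes hypothetical names throughout: `few_shot_prompt` stands in for adaptation by prompting, `exact_match` for one possible metric, and `toy_lm` for a black-box LM that, for brevity, returns only the completion and its log probability (not the prompt's).

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Reference:
    text: str
    tags: set[str] = field(default_factory=set)  # e.g. {"correct"}


@dataclass
class Instance:
    input: str
    references: list[Reference]


@dataclass
class Completion:
    text: str
    logprob: float  # log probability of the completion only


# Black-box LM: (prompt, temperature) -> completion.
LanguageModel = Callable[[str, float], Completion]


def few_shot_prompt(train: list[Instance], test: Instance) -> str:
    """Adaptation by prompting: prepend solved training instances."""
    blocks = []
    for inst in train:
        answer = next(r.text for r in inst.references if "correct" in r.tags)
        blocks.append(f"Input: {inst.input}\nOutput: {answer}")
    blocks.append(f"Input: {test.input}\nOutput:")
    return "\n\n".join(blocks)


def exact_match(completion: Completion, instance: Instance) -> float:
    """Metric: 1.0 if the completion equals any reference tagged correct."""
    correct = {r.text for r in instance.references if "correct" in r.tags}
    return float(completion.text.strip() in correct)


def toy_lm(prompt: str, temperature: float) -> Completion:
    # Hypothetical stand-in for a real LM: always answers "Paris".
    return Completion(text=" Paris", logprob=-0.1)


train = [Instance("Capital of Italy?", [Reference("Rome", {"correct"})])]
test_instance = Instance("Capital of France?",
                         [Reference("Paris", {"correct"}), Reference("Lyon")])
prompt = few_shot_prompt(train, test_instance)
print(exact_match(toy_lm(prompt, 0.0), test_instance))  # 1.0
```

Keeping the LM behind a plain callable mirrors the text-to-text abstraction: the same adaptation and metric code would work whether the model is a dense Transformer, retrieval-augmented, or trained on human preferences.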

To evaluate an LM, one carries out a series of runs, where each run is defined by a scenario, an adaptation method, and a metric. Scenarios, adaptation methods, and metrics each define a complicated, structured space, which one navigates, often implicitly, when making decisions about how to evaluate an LM.
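Because each run is a (scenario, adaptation, metric) triple, the candidate runs can be enumerated as a Cartesian product and then filtered. The scenario and adaptation names and the filtering rule below are hypothetical, chosen only to show that not every combination need be meaningful.

```python
from itertools import product
from typing import NamedTuple


class RunSpec(NamedTuple):
    scenario: str
    adaptation: str
    metric: str


def is_valid(run: RunSpec) -> bool:
    # Hypothetical rule: skip toxicity for short-answer question answering.
    return not (run.scenario == "question_answering" and run.metric == "toxicity")


def enumerate_runs(scenarios, adaptations, metrics) -> list:
    """Every (scenario, adaptation, metric) combination that passes is_valid."""
    runs = [RunSpec(s, a, m) for s, a, m in product(scenarios, adaptations, metrics)]
    return [run for run in runs if is_valid(run)]


runs = enumerate_runs(
    scenarios=["question_answering", "summarization"],
    adaptations=["zero_shot_prompt", "five_shot_prompt"],
    metrics=["accuracy", "toxicity"],
)
print(len(runs))  # 6 of the 8 combinations survive the filter
```

Making the filter an explicit function keeps the choices about which cells to skip visible, rather than leaving them implicit in ad hoc evaluation scripts.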

Scenarios

One can taxonomize scenarios based on the following:

a) a task (e.g. question answering, summarization), which characterizes what we want a system to do.

b) a domain (e.g. a Wikipedia 2018 dump), which characterizes the type of data we want the system to do well on; and

c) the language or language variety (e.g. English).

Tasks, domains, and languages are not atomic or unambiguous constructs: they can be made coarser or finer. Given this structure, one can deliberately select scenarios based on three overarching principles:

a) coverage of the space,

b) minimality of the set of selected scenarios, and

c) prioritizing scenarios that correspond to user-facing tasks.
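One possible way to act on these three principles together is a greedy set-cover heuristic: treat each candidate scenario as a (task, domain, language) triple, repeatedly pick the scenario that covers the most not-yet-covered values (coverage), stop as soon as everything is covered (minimality), and break ties in favor of user-facing scenarios. This heuristic and the candidate list are assumptions for illustration, not a procedure prescribed by the text.

```python
from typing import NamedTuple


class Scenario(NamedTuple):
    task: str
    domain: str
    language: str
    user_facing: bool


def features(s: Scenario) -> set:
    return {("task", s.task), ("domain", s.domain), ("language", s.language)}


def select_scenarios(candidates: list) -> list:
    """Greedy set cover: repeatedly pick the scenario covering the most
    still-uncovered task/domain/language values, preferring user-facing
    scenarios on ties, until every value is covered."""
    uncovered = set().union(*(features(s) for s in candidates))
    chosen = []
    while uncovered:
        best = max(candidates,
                   key=lambda s: (len(features(s) & uncovered), s.user_facing))
        chosen.append(best)
        uncovered -= features(best)
    return chosen


candidates = [
    Scenario("question_answering", "wikipedia", "english", True),
    Scenario("question_answering", "news", "english", False),
    Scenario("summarization", "news", "english", True),
    Scenario("sentiment_analysis", "reviews", "english", True),
]
for s in select_scenarios(candidates):
    print(s.task, s.domain)
```

With these candidates the sketch keeps three of the four scenarios; the non-user-facing news QA scenario is dropped because everything it would cover is already covered by the others.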

Given the ubiquity of natural language, the field of natural language processing (NLP) considers myriad tasks that correspond to language’s many functions (Jurafsky and Martin, 2000). To generate a candidate set of tasks, one can take the tracks at a major NLP conference (ACL 2022) and, for each track, map the associated subarea of NLP to its canonical tasks.

Moreover, domains are a familiar construct in NLP, yet their imprecision complicates systematic coverage of domains. One can further decompose domains according to 3 W’s:

a) What (genre): The type of text, which captures subject and register differences. Examples: Wikipedia, social media, news, scientific papers, fiction.

b) When (time period): When the text was created. Examples: 1980s, pre-Internet, present day (e.g. does it cover very recent data?).

c) Who (demographic group): Who generated the data or who the data is about. Examples: Black/White, men/women, children/elderly.
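The 3 W's can be encoded as a small record in which an unspecified field means "any value", which also captures the earlier point that domains can be made coarser or finer. The `Domain` class and its `covers` method are hypothetical names used only for this sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Domain:
    """A domain described by the 3 W's; None means 'unspecified' (coarser)."""
    what: Optional[str] = None  # genre, e.g. "news"
    when: Optional[str] = None  # time period, e.g. "present day"
    who: Optional[str] = None   # demographic group, e.g. "children"

    def covers(self, other: "Domain") -> bool:
        """True if every field fixed here is fixed to the same value in `other`."""
        return all(
            mine is None or mine == theirs
            for mine, theirs in [(self.what, other.what),
                                 (self.when, other.when),
                                 (self.who, other.who)]
        )


news = Domain(what="news")
recent_news = Domain(what="news", when="present day")
print(news.covers(recent_news))  # True: the coarser domain contains the finer one
print(recent_news.covers(news))  # False
```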

Models

When deployed in practice, models are confronted with the complexities of the open world (e.g. typos), which cause most current systems to degrade significantly (Szegedy et al., 2014; Goodfellow et al., 2015; Jia and Liang, 2017; Belinkov and Bisk, 2018; Madry et al., 2018; Ribeiro et al., 2020; Santurkar et al., 2020; Tsipras, 2021; Dhole et al., 2021; Koh et al., 2021; Yang et al., 2022). One suggestion is to measure the robustness of different models by evaluating them on transformations of an instance [19]: given a set of transformations for an instance, one measures the worst-case performance of a model across these transformations. Other notions of robustness are harder to measure in this setting. Measuring robustness to distribution or subpopulation shift (Oren et al., 2019; Santurkar et al., 2020; Goel et al., 2020; Koh et al., 2021) requires scenarios with special structure (i.e., explicit domain/subpopulation annotations) as well as information about the models' training data, while measuring adversarial robustness (Biggio et al., 2013; Szegedy et al., 2014) requires many adaptive queries to the model to approximate worst-case perturbations, which may not always be feasible (Wallace et al., 2019a; Morris et al., 2020). By contrast, the transformation/perturbation-based paradigm has been widely used to study model robustness (e.g. Ribeiro et al., 2020; Goel et al., 2021; Wang et al., 2021a), in order to understand whether corruptions that arise in real use cases (e.g. typos) significantly affect model performance. The goal is to understand whether a model is sensitive to perturbations that change the target output and does not latch onto irrelevant parts of the instance [20].
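The worst-case-over-transformations idea can be sketched in a few lines: an instance counts as correct only if the model is correct on the original input and on every transformed version of it. The two transformations (lowercasing and a random adjacent-character swap standing in for a typo) and the brittle toy classifier are assumptions for illustration.

```python
import random


def identity(text: str, rng: random.Random) -> str:
    return text


def lowercase(text: str, rng: random.Random) -> str:
    return text.lower()


def typo(text: str, rng: random.Random) -> str:
    """Swap two adjacent characters at a random position."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    return text[:i] + text[i + 1] + text[i] + text[i + 2:]


TRANSFORMS = [identity, lowercase, typo]


def accuracy(model, examples) -> float:
    return sum(model(x) == y for x, y in examples) / len(examples)


def worst_case_accuracy(model, examples, seed: int = 0) -> float:
    """An instance counts as correct only if every transformed input is correct."""
    rng = random.Random(seed)
    hits = sum(all(model(t(x, rng)) == y for t in TRANSFORMS) for x, y in examples)
    return hits / len(examples)


def brittle_model(text: str) -> str:
    # Toy stand-in for an LM-based classifier that relies on capitalization.
    return "positive" if "Great" in text else "negative"


examples = [("Great film", "positive"), ("Dull film", "negative")]
print(accuracy(brittle_model, examples))             # 1.0
print(worst_case_accuracy(brittle_model, examples))  # 0.5
```

The toy model looks perfect on the unmodified inputs, but the worst-case score exposes that it latches onto capitalization, an irrelevant part of the instance.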

Metrics

To taxonomize the space of desiderata, one can begin by enumerating criteria that are necessary for developing useful systems. Yet, what does it mean for a system to be useful? Too often in AI, this has come to mean the system should be accurate in an average sense. While (average) accuracy is an important, and often necessary, property for a system (Raji et al., 2022), accuracy is often not sufficient for a system to be useful/desirable. Unfortunately, while many of the desiderata are well-studied by the NLP community, some are not codified in specific tracks/areas (e.g. uncertainty and calibration). Therefore, it is suggested to expand the scope to all AI conferences, drawing from a list of AI conference deadlines [21].
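Calibration, named above as a desideratum that accuracy alone does not capture, is often summarized by the expected calibration error (ECE): predictions are grouped into confidence bins, and the gap between each bin's accuracy and its average confidence is averaged, weighted by bin size. The sketch below assumes ten equal-width bins; other binning schemes exist.

```python
def expected_calibration_error(confidences, correct, n_bins: int = 10) -> float:
    """ECE with equal-width confidence bins.

    Sums, over non-empty bins, (bin size / total) * |accuracy - mean confidence|.
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        acc = sum(ok for _, ok in b) / len(b)
        avg_conf = sum(c for c, _ in b) / len(b)
        ece += len(b) / n * abs(acc - avg_conf)
    return ece


# Overconfident at 0.9 (half right), underconfident at 0.6 (all right).
print(expected_calibration_error([0.9, 0.9, 0.6, 0.6], [1, 0, 1, 1]))  # ≈ 0.4
```

Both toy models in such a comparison could have identical accuracy while differing sharply in ECE, which is why calibration is measured separately.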

Recommendations

While reasoning is usually assumed to involve transitions in thought (Harman, 2013), possibly in some non-linguistic format, one typical way to assess reasoning abilities (e.g., in adult humans) is through explicit symbolic or linguistic tasks. To distinguish reasoning from language and knowledge as much as possible, one can focus on relatively abstract capacities necessary for sophisticated text-based or symbolic reasoning. To measure ampliative reasoning, one can use explicit rule induction and implicit function regression, which correspond to making and applying claims about the likely causal structure underlying observations. For rule induction, one can design and implement rule induction tasks inspired by the LIME induction tasks, in which the model is given two examples generated from the same rule string and asked to infer the underlying rule. For function regression, one can design and implement numeracy prediction, which requires the model to perform symbolic regression from a few examples and apply the numerical relationship (e.g. linear) to a new input. One can also evaluate language models on more complex and realistic reasoning tasks that require multiple primitive reasoning skills, bridging the gap between reasoning in very controlled, synthetic conditions and the type of reasoning required in practical contexts.
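A numeracy-prediction instance of the linear kind described above might be generated as follows. The prompt format ("x, y" per line, with the query value followed by a trailing comma), the coefficient ranges, and the function name are all assumptions for illustration.

```python
import random


def numeracy_instance(n_examples: int = 3, seed: int = 0):
    """Few-shot linear function regression: infer y = a*x + b, then apply it.

    Returns (prompt, target, (a, b)), where the prompt lists n_examples
    "x, y" pairs followed by a query "x," whose y the model must produce.
    """
    rng = random.Random(seed)
    a, b = rng.randint(-5, 5), rng.randint(-10, 10)
    xs = rng.sample(range(-20, 21), n_examples + 1)  # distinct inputs
    lines = [f"{x}, {a * x + b}" for x in xs[:-1]]
    query = xs[-1]
    prompt = "\n".join(lines + [f"{query},"])
    return prompt, str(a * query + b), (a, b)


prompt, target, (a, b) = numeracy_instance(seed=1)
print(prompt)
print("expected answer:", target)
```

Because the relationship is never stated, the model has to induce it from the examples (regression) and then apply it to the query, which separates the reasoning step from stored knowledge.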

Conclusions

Focusing on the evaluation of models is essential to ensure that technology plays a positive role in social change. In this regard, this study explored the main features of benchmarks (scenario, adaptation, and metric) required to provide a roadmap for how to evaluate LMs. It also made recommendations on how to use models and metrics for fairness and transparency when developing LMs. This study conceptualized a benchmark model for evaluating NLP models. Given the lack of studies in the field, it is a step towards the design of more sophisticated models and is thus, for now, far from perfect. Nevertheless, it aims to raise awareness of the importance of developing benchmarks for AI models.

References

- Allison Koenecke, Andrew Nam, Emily Lake, Joe Nudell, Minnie Quartey, et al. (2020) Racial disparities in automated speech recognition. Proc Natl Acad Sci 117(14): 7684-7689.

- Alexandre Lacoste, Alexandra Luccioni, Victor Schmidt, Thomas Dandres (2019) Quantifying the carbon emissions of machine learning p. 1-8.

- Ankit Kumar, Ozan Irsoy, Peter Ondruska, Mohit Iyyer, James Bradbury, et al. (2016) Ask me anything: Dynamic memory networks for natural language processing. In International Conference on Machine Learning pp. 1378-1387.

- Atoosa Kasirzadeh and Iason Gabriel (2022) In conversation with artificial intelligence: Aligning language models with human values pp. 1-30.

- Bernard Koch, Emily Denton, Alex Hanna, and Jacob Gates Foster (2021) Reduced, reused, and recycled: The life of a dataset in machine learning research. In Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track pp. 1-18.

- Christopher Potts, and Matei Zaharia (2021) A moderate proposal for radically better AI-powered Web search. Stanford HAI Blog.

- Daniel Khashabi, Yeganeh Kordi, and Hannaneh Hajishirzi (2022) UnifiedQA-v2: Stronger generalization via broader cross-format training p. 1-7.

- Davis D, Seaton D, Hauff C and Houben GJ (2018) Toward large-scale learning design: Categorizing course designs in service of supporting learning outcomes. Proceedings of the Fifth Annual ACM Conference on Learning at Scale p. 1-10.

- Devlin J, Chang MW, Lee K and Toutanova K (2018) BERT: Pre-training of deep bidirectional transformers for language understanding pp. 1-16.

- Divyansh Kaushik, Eduard Hovy, and Zachary Lipton (2019) Learning the difference that makes a difference with counterfactually-Augmented data. In International Conference on Learning Representations (ICLR) pp. 1-17.

- Fereshte Khani and Percy Liang (2020) Feature noise induces loss discrepancy across groups. In International Conference on Machine Learning (ICML) pp. 1-19.

- González-Carvajal S and Garrido-Merchán EC (2020) Comparing BERT against traditional machine learning text classification pp. 1-15.

- Grandini M, Bagli E and Visani G (2020) Metrics for multi-class classification: An overview pp. 1-17.

- Hannah Rose Kirk, Abeba Birhane, Bertie Vidgen, and Leon Derczynski (2022) Handling and presenting harmful text in NLP research; Andy Kirkpatrick (2020) The Routledge handbook of world Englishes. Routledge.

- Jared Kaplan, Sam McCandlish, T J Henighan, Tom B Brown, Benjamin Chess, et al. (2020) Scaling laws for neural language models p. 1-30.

- Kingma DP and Ba J (2014) Adam: A method for stochastic optimization pp. 1-15.

- Matt J Kusner, Joshua R Loftus, Chris Russell, Ricardo Silva (2017) Counterfactual fairness. In Advances in Neural Information Processing Systems (NeurIPS) p. 1-11.

- Philippe Laban, Tobias Schnabel, Paul N Bennett and Marti A. Hearst (2022) SummaC: Re-Visiting NLI-based Models for Inconsistency Detection in Summarization. Transactions of the Association for Computational Linguistics 10: 163-177.

- Tom Kwiatkowski, Jennimaria Palomaki, Olivia Redfield, Michael Collins, Ankur Parikh (2019) Natural questions: A benchmark for question answering research. In Association for Computational Linguistics (ACL) 7: 453-466.

- Tomáš Kočiský, Jonathan Schwarz, Phil Blunsom, Chris Dyer, Karl Moritz Hermann, et al. (2017) The NarrativeQA reading comprehension challenge p. 1-12.

- Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser L, Polosukhin I (2017) Attention is all you need. 31st Conference on Neural Information Processing Systems pp. 5998-6008.
