Lupine Publishers Group

ISSN: 2637-4668

Research Article (ISSN: 2637-4668)

Applications of ICTs and Action Recognition for Construction Workers

Volume 1 - Issue 3

Ren-Jye Dzeng*, Hsien Hui Hsueh1 and Keisuke Watanabe2

- 1Department of Civil Engineering, National Chiao Tung University, Taiwan

- 2Department of Navigation and Ocean Engineering, Tokai University, Japan

Received: February 02, 2018; Published: February 13, 2018

*Corresponding author: Ren-Jye Dzeng, Department of Civil Engineering, National Chiao Tung University, Taiwan

DOI: 10.32474/TCEIA.2018.01.000113

Abstract

Human action recognition has drawn considerable attention because of its wide variety of potential applications in the computer vision sector. The past few decades have witnessed rapid advancements in various approaches such as scene interpretation and human body recognition. These technologies have also been applied in several areas of the construction industry. This paper reviews research on the use of information and communication technologies for human action recognition in construction management. The technologies used to capture and classify actions, including vision- and non-vision-based approaches, are summarized, and the review also covers the three major application areas: ergonomics, personal safety, and productivity.

Keywords: Recognition; Activity; Behavior; State; Review

Introduction

Human action recognition has gained considerable attention because of its wide variety of potential applications (e.g., entertainment, rehabilitation, robotics, and security) in the computer vision sector. Over the past few decades, rapid advances have been made in various approaches such as scene interpretation, holistic body-based recognition, body part-based recognition, and action hierarchy-based recognition [1]. Recognizing the activities of workers enables measurement and control of safety, productivity, and quality at construction sites, and automated activity recognition can enhance the efficiency of the measurement system [2]. Novel information and communication technologies (ICTs) have undergone unprecedented advancements in recent decades and are transforming lives as well as academic research.

This paper reviews the recent research developments and applications regarding action recognition in the construction industry. Because action recognition is employed in several research fields where different terminologies are used, the term "action" is used in this paper in a broader sense and may refer to "activity," "behavior or state," and "posture," which are all essential for the management of construction jobsites. First, the action of a construction worker may refer to a typical activity performed by a worker, such as formwork assembly or concrete pouring. It may also refer to more detailed operative steps or subactivities of an activity, such as hammering nails and turning a wrench. In the context of productivity, activities may include not only production activities but also supportive activities such as moving or sorting tiles and idling activities such as chatting or smoking.

Second, the action of a construction worker may refer to behavior such as the adoption of a stance (e.g., squatting or bending to lift a load), a physical state (e.g., unsteady movement or sudden stamping), or a mental state (e.g., vigilance or inattention) that a worker intentionally or unintentionally exhibits. For example, in the context of monitoring worker safety at a jobsite, the action may concern whether a worker is taking a normal stance or making a hazardous action such as a sudden stamp. Finally, the action of a worker may refer to posture (e.g., a large angle of bending when carrying heavy loads) when research is concerned with ergonomics and worker health. Recognizing the actions of workers is important for many aspects of construction management, such as monitoring personal hazardous behaviors to prevent work-related injuries or work-related musculoskeletal disorders (WMSDs), monitoring work activities to reduce non-value-added activity and improve productivity, and facilitating visual communication (e.g., hand signals between an equipment operator and on-ground support crew).

Conventionally, action recognition is performed visually by human inspectors or supervisors; it is a time-consuming and error-prone process because of the subjective nature of human observation and the limitations of human memory. ICTs have gained considerable attention in recent years owing to their availability, affordability, diversity, and applicability. The ability to detect human actions is particularly crucial and has received great attention from both practitioners and researchers in several other fields such as ambient intelligence, surveillance, elderly care, human-machine interaction, smart manufacturing, sports training, postural correction and rehabilitation, and entertainment games [3]. Compared with environments such as the manufacturing industry, where fabrication is mostly based on static stations and assembly processes, the dynamic nature of construction activities, workfaces, and site environments makes the application of ICTs for action recognition more challenging because of problems such as occlusions, different viewpoints, multiple workers working simultaneously, and a large range of movement.

This paper reviews the recent development of human-action-recognition technologies and their applications in the construction industry. Some other technologies (e.g., ultra-wideband, radiofrequency identification, and global positioning systems) that monitor construction activities or workers without involving action recognition are beyond the scope of this review. For example, the research by Cheng et al. [4], which used commercial ultra-wideband technology to monitor resource allocations and productivity at a harsh construction site, is not covered by this review because the researchers derived information about ongoing activities solely from the locations and movements of each type of resource without actually monitoring individual actions.

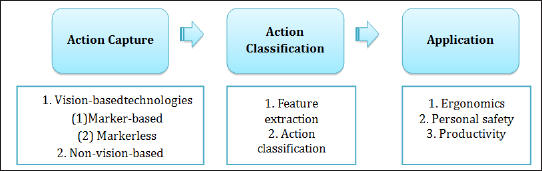

This paper divides the development of an action recognition system into three basic modules: action capture, action classification, and application, as shown in Figure 1. The structure of this review is based on these three modules. First, the section "Action Capture" reviews both vision-based and non-vision-based technologies that have been proposed in the literature. The section "Action Classification" reviews the methodologies used for both feature extraction and classification. Finally, the section "Application" reviews the three major applications: ergonomics, personal safety, and productivity.

Figure 1: Outline of the Review.

Action Capture

The first step in recognizing human action is to capture it, typically as raw data; these data are not understandable by a computer system or human without further analysis. In general, the technologies available for this purpose can be divided into two categories: vision-based and non-vision-based.

Vision-based technologies

Vision-based technologies enable conversion of raw video images of an observed target person into computerized data that can be understood by the system developed for the research purpose but not yet within the domain context. For example, the technologies may allow a system to identify that the target person is raising one hand at a certain angle; however, whether this action is detrimental to the person is unknown until further classification. Vision-based technologies normally involve capturing and processing video images and converting them into a three-dimensional (3D) human skeleton model. These technologies use two approaches: marker-based and markerless approaches. Sharma et al. [5] reviewed several motion capture methodologies, including both marker-based motion capture (e.g., acoustical, mechanical, magnetic, and optical systems) and markerless motion capture (such as video image recognition).

Marker-based motion capture approaches are typically expensive, require complex setups, and interfere more with workers' activities; however, they offer higher accuracy and avoid occlusion problems in some cases. By contrast, markerless motion capture approaches are typically cheaper, require simple setups, and cause less interference to workers. Optical motion capture systems are a popular marker-based approach in the construction industry. These systems use passive (e.g., retroreflective) or active (e.g., light-emitting diode) markers attached to a subject's body parts, which is a common technique for collecting kinematic data in biomechanical studies [6]. Commercial systems such as Vicon™ and Qualisys™ provide whole-body motion data with a positional accuracy better than 1 mm [7]. Markerless approaches require multiple cameras or RGB-depth cameras to capture the motion of a target worker and extract 3D skeleton models of the worker for subsequent analysis.

Researchers previously relied on two-dimensional (2D) RGB video images recorded from multiple cameras to measure postural angles of the human body through visual estimation, mathematical calculations, or a flexible 3D mannequin [8]. Although these methods were cost-effective and noninvasive, they were time-consuming and resulted in large errors. Moreover, researchers often needed to create their own algorithms to identify the associated pose and construct the corresponding 3D skeleton model. For example, Han and Lee [9] used stereo cameras to collect motion data to construct a 3D skeleton model for identifying unsafe actions during a typical construction task on a ladder. Khosrowpour et al. [10] also used depth images for activity analysis of interior construction operations. Liu et al. [11] used two smartphones as stereo cameras to record motion data and developed their own algorithm for extracting 3D skeleton models from 2D images of target workers. Wireless video cameras and 3D range image cameras were also used by researchers to monitor and detect specific activities [9,12].

With the development of depth camera solutions that are usually equipped with a proprietary algorithm for extracting a 3D skeleton, the construction of a 3D skeleton model of a target worker has become considerably easier. Unlike an RGB image, a depth image stores, for each pixel, the distance from the camera to the 3D object surface seen at that pixel. Tracking of the human skeleton is one important feature provided by depth camera solutions. In the vision-based approach, the use of depth cameras to construct a 3D skeleton model has proliferated in recent years, especially after Microsoft introduced Kinect™ into the gaming market at an attractive and affordable price compared with other depth camera solutions. Kinect™ was originally developed for the Xbox 360 video game console and Windows personal computers to support a natural user interface that allows users to communicate with the system or the game using gestures without wearing any attachments or holding any controllers.
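To illustrate what a depth image adds over an RGB image, the following minimal sketch back-projects one depth pixel into a 3D point in the camera frame using the standard pinhole camera model. The function name and the intrinsic parameters (`FX`, `FY`, `CX`, `CY`) are placeholders chosen for illustration; they are not the calibration of Kinect™ or of any other sensor discussed here.

```python
def depth_pixel_to_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth depth_m (meters) into camera coordinates."""
    x = (u - cx) * depth_m / fx  # horizontal offset from the optical axis
    y = (v - cy) * depth_m / fy  # vertical offset from the optical axis
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 depth image (assumed values).
FX, FY, CX, CY = 580.0, 580.0, 320.0, 240.0

# A pixel at the image center lies on the optical axis.
point = depth_pixel_to_point(u=320, v=240, depth_m=2.0, fx=FX, fy=FY, cx=CX, cy=CY)
print(point)
```

Skeleton-tracking software works on such 3D points rather than on raw pixel colors, which is why depth cameras simplify the construction of a skeleton model.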

Nevertheless, the limitations of Kinect™, such as its lengthy computation periods, low accuracy, and interference from occlusions and high levels of illumination, mean that Kinect™ is more feasible in controlled indoor environments than in outdoor construction jobsite environments [13,14]. Han et al. [1] used depth cameras to classify unsafe behaviors in four actions during ladder climbing (i.e., normal climbing, backward-facing climbing, holding an object when climbing, and reaching far to the side while on the ladder). Marker-based and markerless solutions each have their own advantages and disadvantages. Han et al. [1] compared the performance of the Kinect™ system, a markerless system, with that of the Vicon™ system, a more accurate marker-based system, and found that the mean difference in measured rotation angles was 15.9°, with the error increasing to 49.0° in the case of the right forearm.

In contrast to Vicon™, which tracked markers using six or eight cameras, Kinect™ used only one camera and suffered from errors caused by occlusions. Kinect™ does not function correctly in certain lighting conditions (e.g., outdoor environments) and has a limited operating range (e.g., 0.8-4.0 m) and tracking accuracy (e.g., compared with Vicon™, Kinect™ may not accurately track the locations of body parts when occluded). However, Kinect™ may be more suitable than marker-based technology for field settings because it does not require construction workers to wear any devices. In addition, whether human tracking requires only a qualitative assessment of posture category or a more precise set of quantitative parameters articulating body configuration is critical when selecting an appropriate approach.

Non-vision-based technologies

Non-vision-based technologies enable researchers to identify human actions without visual perception of the target person. Instead of image sensors, sensors such as accelerometers, gyroscopes, and pressure sensors are employed. The accelerometer, sometimes coupled with a gyroscope, is popular for tracking human actions in the construction industry. Most modern smartphones are equipped with a built-in accelerometer and gyroscope. An accelerometer is a sensor that measures acceleration along the x-, y-, and z-axes. Early studies focused on determining the relationship between accelerometer readings and metabolic activity. For example, Uiterwal et al. [15] reassigned injured workers to less physically demanding tasks to help their rehabilitation, with task suitability defined by the relationship between accelerometer values and metabolic activity. In the last decade, researchers in the area of accelerometer-based action recognition have focused mainly on the number of sensors, sensor positions, and data analysis techniques.

Action recognition using accelerometers has shown promising applications over the last decade, especially in health care and sports [16-19]. In the construction industry, Joshua and Varghese [2] developed a methodology for evaluating classifiers that recognize masonry activities based on features generated from the data collected by a single accelerometer attached to the waist of the mason. Their experiment, performed in instructed and uninstructed modes, revealed that the most effective classifier was a neural network classifier. Fang and Dzeng [20] developed a novel hierarchical algorithm to identify hazardous portents (i.e., heavy footsteps, sudden knee movements, sudden swaying, abrupt body reflexes, imbalance, the catching of dropped tiles, and unsteady footsteps) that are likely to lead to a fall by classifying data from accelerometers attached to the waist, chest, arm, and hand of the target person performing tiling tasks.
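As a rough illustration of how features can be generated from accelerometer data before classification, the sketch below splits a stream of (x, y, z) readings into fixed-size windows and computes the mean and standard deviation of the acceleration magnitude in each window. The window size, the choice of features, and the sample data are assumptions made for this example; they are not the feature sets used in the studies cited above.

```python
import math
import statistics


def magnitude(sample):
    """Euclidean norm of one (x, y, z) accelerometer reading."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)


def window_features(samples, window_size):
    """Return (mean, population std) of the magnitude for each non-overlapping window."""
    features = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        mags = [magnitude(s) for s in samples[start:start + window_size]]
        features.append((statistics.fmean(mags), statistics.pstdev(mags)))
    return features


# Made-up readings in units of g: a still period followed by a vigorous one.
still = [(0.0, 0.0, 1.0)] * 4
active = [(0.0, 0.0, 1.0), (3.0, 0.0, 4.0), (0.0, 0.0, 1.0), (3.0, 0.0, 4.0)]

for mean_mag, std_mag in window_features(still + active, window_size=4):
    print(f"mean={mean_mag:.2f} g, std={std_mag:.2f} g")
```

Each window yields one feature vector, so a classifier sees a sequence of short, comparable summaries instead of the raw signal.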

Action Classification

In general, two steps, feature extraction and action classification, are required to classify an action of a 3D human skeleton model. In the vision-based approach, feature extraction converts raw data into a human skeleton and filters local parts (e.g., the right arm) or characteristics (e.g., the Euler angle between the vectors of the back and right arm) that are essential for classifying actions based on the research interest (e.g., safety, ergonomics). Because the non-vision-based approach does not visualize the human skeleton, feature extraction is reduced to the filtering of important features in raw sensor data (e.g., the y-axis acceleration value of an accelerometer). In the vision-based approach, several methods can be used to extract shapes and temporal motions from images; these include classifier tools (e.g., support vector machines (SVMs)), temporal state-space models (e.g., hidden Markov models), conditional random fields, and detection-based methods (e.g., bag-of-words coding) [13]. Other pattern recognition methods or predefined rules and evaluation matrices may also be appropriate for use in classification.
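The kind of skeleton feature mentioned above, an angle between the back and the right arm, can be sketched as follows. This example computes the plain included angle between two segment vectors from 3D joint positions, which is a simplification of a full Euler-angle description. The joint names and coordinates are hypothetical and do not come from any specific skeleton-tracking SDK.

```python
import math


def segment_vector(joint_from, joint_to):
    """Vector pointing from one joint to another."""
    return tuple(b - a for a, b in zip(joint_from, joint_to))


def angle_between_deg(v1, v2):
    """Included angle between two 3D vectors, in degrees."""
    dot = sum(a * b for a, b in zip(v1, v2))
    n1 = math.sqrt(sum(a * a for a in v1))
    n2 = math.sqrt(sum(b * b for b in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("zero-length segment")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.degrees(math.acos(cos_angle))


# Hypothetical joint positions in meters (y axis pointing up).
hip, neck = (0.0, 0.0, 0.0), (0.0, 0.5, 0.0)
right_shoulder, right_elbow = (0.2, 0.5, 0.0), (0.5, 0.5, 0.0)

back = segment_vector(hip, neck)
upper_arm = segment_vector(right_shoulder, right_elbow)
print(f"back/upper-arm angle: {angle_between_deg(back, upper_arm):.1f} degrees")
```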

Feature extraction involves subtracting the background of an image, identifying the human, and estimating the locations of the human's skeletal joints. Some researchers such as Ray and Teizer [21] have developed their own feature extraction algorithms. The software development kit provided by Microsoft for Kinect™ includes functions that may be directly used by a developer to obtain information about the human skeleton model. Dzeng et al. [14] used Kinect™ to identify the awkward postures of a worker during typical construction tasks such as the lifting and moving of materials and hammering of nails. Favorable identification accuracy was obtained if the camera had a front view of the observed human's targeted body parts and the view was not obstructed; the extraction algorithm performed poorly when a side-view camera was used. Action classification is a pattern recognition process that converts the information of extracted features into an action category allocation for the desired aspect.

For example, Ray and Teizer [21] investigated the ergonomics of the tasks of "overhead work," "squat or sit to lift a load," "stoop or bend to lift a load," and "crawl" and established computerized rules for determining if a particular posture is ergonomically correct. Instead of constructing in-house rules, Dzeng et al. [14] determined if a posture was awkward using the Ovako Working Posture Analysis System (OWAS) [22], a postural observational method commonly used to identify postures that cause discomfort and are detrimental to workers’ health at worksites based on the local postures of major body parts (i.e., back, forearms, and legs) and the force exerted or load carried during work. Similarly, Han and Lee [9] used Vicon™ to monitor a worker on a ladder, created a 3D skeletal model of the worker, and identified unsafe actions such as reaching too far to the side of the ladder based on pattern recognition. Han et al. [1] employed a one-class SVM with a Gaussian kernel to define a classifier based on similarity features for identifying unsafe actions in the context of the ladder-climbing task.
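A rule-based posture check of the kind described above can be sketched in a few lines. The example below estimates trunk flexion as the angle between the hip-to-neck segment and the vertical axis and labels the back as "bent" when the angle exceeds a cut-off. The 20° threshold, the two labels, and the joint coordinates are assumptions for illustration only; they are not the rules published in the cited studies or the official OWAS coding scheme.

```python
import math

BENT_THRESHOLD_DEG = 20.0  # assumed cut-off, for illustration only


def trunk_flexion_deg(hip, neck):
    """Angle between the hip->neck segment and the vertical (y-up) axis, in degrees."""
    dx, dy, dz = (n - h for h, n in zip(hip, neck))
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0:
        raise ValueError("hip and neck coincide")
    cos_angle = max(-1.0, min(1.0, dy / length))
    return math.degrees(math.acos(cos_angle))


def classify_back(hip, neck, threshold_deg=BENT_THRESHOLD_DEG):
    """Apply a single predefined rule to label the back posture."""
    return "bent" if trunk_flexion_deg(hip, neck) > threshold_deg else "straight"


# Hypothetical joint positions in meters (y axis pointing up).
print(classify_back(hip=(0.0, 0.0, 0.0), neck=(0.0, 0.5, 0.0)))  # upright trunk
print(classify_back(hip=(0.0, 0.0, 0.0), neck=(0.5, 0.5, 0.0)))  # trunk leaning forward
```

A practical rule set would combine several such checks (back, arms, legs, load) before assigning an overall posture category.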

Akhavian and Behzadan [23] used several machine learning algorithms (i.e., a neural network, decision trees, K-nearest neighbors, logistic regression, and an SVM) to automatically categorize three types of construction tasks (sawing, hammering + turning a wrench, and loading + hauling + unloading + returning) performed by students based on the data collected from the built-in accelerometer and gyroscope sensors of arm-attached smartphones. Rather than using conventional physical skeletal approaches, Chen et al. [24] represented motion data as high-order tensors (i.e., a higher-order generalization of vectors and matrices) based on rotation matrices and Euler angles. Without reconstructing 3D skeletons, the tensor representation encoded different types of awkward postures as high-dimensional arrays so that complicated temporal postures could be handled as simple tensors, improving data processing efficiency. The system further applied a dimension reduction process based on canonical polyadic decomposition to extract features from raw motion tensors, and multiclass SVM classification was employed to increase the accuracy and decrease the computation power and memory required.
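To make one of the classifiers listed above concrete, the following minimal sketch implements K-nearest-neighbor classification on two-dimensional feature vectors. The training data, the feature meaning (mean and standard deviation of acceleration magnitude), and the labels are invented for this example and do not reproduce the cited experiments.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Return the majority label among the k training points closest to query.

    train is a list of (feature_vector, label) pairs.
    """
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Made-up training set: (mean magnitude, std of magnitude) -> activity label.
training_set = [
    ((1.00, 0.05), "idle"),
    ((1.00, 0.10), "idle"),
    ((1.05, 0.08), "idle"),
    ((1.80, 0.90), "hammering"),
    ((2.00, 1.10), "hammering"),
    ((1.90, 1.00), "hammering"),
]

print(knn_predict(training_set, (1.02, 0.07)))
print(knn_predict(training_set, (1.95, 1.00)))
```

In practice, features on different scales are usually normalized before distances are computed, and k is chosen by validation on held-out data.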

Application

Recognizing the actions of individual construction workers may help to identify potentially risky actions in their daily work that may cause cumulative injuries such as WMSDs or accidental injuries such as falls. Automating the recognition and monitoring of workers' actions may also help identify non-value-adding activities and improve productivity. This section reviews three major applications of human action recognition technologies.

Ergonomics

Ergonomic analysis in the construction industry has many aspects and may require monitoring of the physiological status (e.g., heart rate or body temperature), pose, posture, or motion of a worker. The applications may include tracking of workers' ergonomic status at a jobsite or a system that improves workers' posture through training in a mock-up environment. Seo et al. [8] focused on automating the conversion of motion data into data usable by existing biomechanical analysis models to identify risks of WMSDs during lifting tasks. Dzeng et al. [14] used Kinect™ to video-record and monitor the individual postures of workers during several construction tasks and identify the postures that may lead to WMSDs.

Ray and Teizer [21] used Kinect™ cameras for real-time monitoring of a worker in motion and employed the OpenNI middleware to determine the variation in angles between the various segments of the human body and whether body posture is ergonomically correct based on a predefined set of rules. Valero et al. [25] constructed a system that detects the ergonomically hazardous postures of construction workers based on motion data acquired from wearable wireless inertial measurement sensors (i.e., accelerometer, gyroscope, and magnetometer) integrated in a body area network with components for wireless transmission, built-in storage, and power supply. The system targets the lower back and legs and counts ergonomically hazardous motions during the tasks of squatting and stooping.
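Counting hazardous motions from a stream of joint angles can be illustrated with a simple threshold-crossing counter. The sketch below counts how many times a trunk flexion angle rises above a cut-off, treating each continuous stretch above the cut-off as one episode. The 60° threshold and the angle series are assumed values for illustration; the sketch is not the detection logic of the systems cited above.

```python
def count_episodes(angles_deg, threshold_deg):
    """Count upward crossings of threshold_deg in a sequence of angles."""
    count = 0
    above = False
    for angle in angles_deg:
        now_above = angle > threshold_deg
        if now_above and not above:
            count += 1  # a new episode starts when the angle first exceeds the threshold
        above = now_above
    return count


# Made-up trunk flexion angles (degrees) sampled over time: two stooping episodes.
flexion_series = [5, 10, 50, 65, 30, 8, 12, 70, 72, 15]
print(count_episodes(flexion_series, threshold_deg=60))
```

Counting episodes rather than samples avoids inflating the total when a worker holds a stooped posture for several consecutive readings.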

Personal Safety

Workers' safety is essential for the success of a construction project. Several factors affect jobsite safety, including safety education, safety management, the safety of the working environment, and the use of safety equipment such as nets, cables, and helmets to protect workers from injuries. In addition to these aspects, monitoring of the behavior and physiological or mental status of a worker is useful for reducing work accident rates. To improve worker safety at jobsites, Han and Lee [9] used Vicon™ to visually monitor a worker on a ladder and create a 3D skeletal model of the worker; using this model, the system identified unsafe actions such as reaching too far to the side while on a ladder.

Fang and Dzeng [20] developed an algorithm to detect hazardous portents that might trigger falls based on accelerometer data collected from sensors attached to the safety vest of construction workers. Wang et al. [26] used a commercial wireless and wearable electroencephalography system, preinstalled in a helmet, to monitor workers' attention and vigilance toward surrounding hazards while they performed certain construction activities. Yan et al. [27] employed a wearable inertial measurement unit attached to a subject's ankle to collect kinematic gait data and discovered a strong correlation with the existence of installed fall hazards, such as obstacles and slippery surfaces, suggesting that such devices and workers' abnormal gait responses can be used to reveal safety hazards in construction environments. Kurien et al. [28] envisioned a future jobsite where robotic construction workers perform hazardous jobs and developed a system that tracks both a worker's body and hand motions to control a virtual robotic worker in a virtual reality world built using Unity 3D.

Productivity

Akhavian and Behzadan [29] automated activity-level productivity measurement within the construction engineering and management context based on accelerometer data collected from smartphones and processed using machine learning classification algorithms to recognize the idle or busy state of workers. They [30,31] also developed a machine-learning-based framework to extract the durations of activities performed by construction workers from wearable sensors (i.e., accelerometer and gyroscope) to increase the accuracy of activity duration estimation in a simulation model. Several classifiers were investigated: a feedforward back-propagation artificial neural network, an SVM, K-nearest neighbors, logistic regression, and decision trees. Similarly, Cheng et al. [4] used a data fusion approach to integrate ultra-wideband and physiological status monitoring data for facilitating real-time productivity assessment.
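Once each time window has been classified as idle or busy, activity durations and a simple utilization measure follow directly from the label sequence. The sketch below collapses consecutive identical labels into segments and computes the share of busy windows. The labels, the 2-second window length, and the function names are assumptions for illustration rather than the framework of the cited studies.

```python
from itertools import groupby


def activity_durations(labels, window_seconds):
    """Collapse consecutive identical labels into (label, duration_seconds) segments."""
    return [
        (label, sum(1 for _ in run) * window_seconds)
        for label, run in groupby(labels)
    ]


def busy_ratio(labels, busy_label="busy"):
    """Fraction of windows classified as busy (0.0 for an empty sequence)."""
    return labels.count(busy_label) / len(labels) if labels else 0.0


# Made-up classifier output, one label per 2-second window.
predicted = ["busy", "busy", "idle", "busy", "busy", "busy"]

for label, seconds in activity_durations(predicted, window_seconds=2.0):
    print(f"{label}: {seconds:.0f} s")
print(f"busy ratio: {busy_ratio(predicted):.2f}")
```

The resulting durations are the kind of quantity that can be fed into a simulation model as activity duration estimates.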

Some other productivity-related studies involved recognition of project equipment and resource activity rather than human activity. For example, Gong and Caldas [32] developed a video interpretation model to automatically convert videos of construction operations into productivity information by tracking and recognizing project resource operations as well as work states for productivity management. Kim et al. [33] used both accelerometers and gyroscopes to classify daily activities into the basic classes of sitting, walking up and down stairs, biking, and being stationary to estimate energy expenditure in a building environment.

Conclusion

This review of the literature related to human action recognition has revealed its proliferation and fast advancement in the construction research community over the last decade. To overcome problems such as occlusions and strong outdoor lighting, which are caused by the dynamic and complex nature of the construction environment, non-vision-based approaches to recognizing human action have gained considerable attention. In addition to tracking human action, research has also been conducted into the tracking of construction resources for analyzing productivity or performing simulations. We believe that the technology will soon be applied to other areas such as material storage monitoring or improving site layout. Additionally, brain sensors have been used to explore the mental state of construction workers. As brain sensors continue to become cheaper and as better dry-type noncontact electroencephalography sensors are developed, the application of brain sensors in construction fields is becoming more feasible. We certainly expect more researchers to exploit this technology in the near future.

Acknowledgment

The authors would like to thank the Ministry of Science and Technology of Taiwan, which financially supported this research under contract 105-2221-E-009-022-MY3.

References

- SU Han, SH Lee, F Pena Mora (2014) Comparative study of motion features for similarity-based modeling and classification of unsafe actions in construction. Journal of Computing in Civil Engineering 28(5): A4014005.

- L Joshua, K Varghese (2011) Accelerometer-based activity recognition in construction. Journal of Computing in Civil Engineering 25(5): 370-379.

- S Pellegrini, L Iocchi (2008) Human posture tracking and classification through stereo vision and 3D model matching. EURASIP Journal on Image and Video Processing: 476151.

- T Cheng, M Venugopal, J Teizer, P Vela (2011) Performance evaluation of ultra wide band technology for construction resource location tracking in harsh environments. Automation in Construction 20(8): 1173-1184.

- A Sharma, M Agarwal, A Sharma, P Dhuria (2013) Motion capture process, techniques and applications. International Journal on Recent and Innovation Trends in Computing and Communication 1(4): 251-257.

- K Aminian, B Najafi (2004) Capturing human motion using body-fixed sensors: Outdoor measurement and clinical applications. Computer Animation and Virtual Worlds 15(2): 79-94.

- AD Wiles, DG Thompson, DD Frantz (2004) Accuracy assessment and interpretation for optical tracking systems. Proceedings of SPIE Medical Imaging 2004: Visualization, Image-Guided Procedures, and Display : 421-432.

- JO Seo, R Starbuck, SU Han, SH Lee, TJ Armstrong (2015) Motion data-driven biomechanical analysis during construction tasks on sites. Journal of Computing in Civil Engineering 29(4): B4014005.

- S Han, S Lee (2013) A vision-based motion capture and recognition framework for behavior-based safety management. Automation in Construction 35: 131-141.

- A Khosrowpour, JC Niebles, M Golparvar Fard (2014) Vision-based workface assessment using depth images for activity analysis of interior construction operations. Automation in Construction 48: 74-87.

- M Liu, SU Han, SH Lee (2016) Tracking-based 3D human skeleton extraction from stereo video camera toward an on-site safety and ergonomic analysis. Construction Innovation 16(3): 348-367.

- R Gonsalves, J Teizer (2009) Human motion analysis using 3D range imaging technology. Proceedings of the 26th International Symposium on Automation and Robotics in Construction, Austin, Texas, USA.

- L Ding, W Fang, H Luo, P Love, B Zhong, et al. (2018) A deep hybrid learning model to detect unsafe behavior: Integrating convolution neural networks and long short-term memory. Automation in Construction 86: 118-124.

- RJ Dzeng, HH Hsueh, CW Ho (2017) Automated posture assessment for construction workers. Proceedings of the 40th Jubilee International Convention on Information and Communication Technology, Electronics and Microelectronics, Opatija, Croatia.

- M Uiterwal, EBC Glerum, HJ Busser, RC van Lummel (1998) Ambulatory monitoring of physical activity in working situations, a validation study. Journal of Medical Engineering & Technology 22(4): 168-172.

- J Parkka, M Ermes, P Korpipaa, J Mantyjarvi, J Peltola, et al. (2006) Activity classification using realistic data from wearable sensors. IEEE Transactions on Information Technology in Biomedicine 10(1): 119-128.

- DM Karantonis, MR Narayanan, M Mathie, NH Lovell, BG Celler (2006) Implementation of a real-time human movement classifier using a triaxial accelerometer for ambulatory monitoring. IEEE Transactions on Information Technology in Biomedicine 10(1): 156-167.

- J Lester, T Choudhury, G Borriello (2006) A practical approach to recognizing physical activities. Proceedings of Pervasive 2006 (LNCS 3968), Springer-Verlag, Berlin, Germany, p. 1-17.

- M Ermes, J Parkka, J Mantyjarvi, I Korhonen (2008) Detection of daily activities and sports with wearable sensors in controlled and uncontrolled conditions. IEEE Transactions on Information Technology in Biomedicine 12(1): 20-26.

- YC Fang, RJ Dzeng (2017) Accelerometer-based fall-portent detection algorithm for construction tiling operation. Automation in Construction 84: 214-230.

- SJ Ray, J Teizer (2012) Real-time construction worker posture analysis for ergonomics training. Advanced Engineering Informatics 26(2): 439-455.

- O Karhu, P Kansi, I Kuorinka (1977) Correcting working postures in industry: a practical method for analysis. Applied Ergonomics 8(4): 199-201.

- R Akhavian, AH Behzadan (2016) Smartphone-based construction worker's activity recognition and classification. Automation in Construction 71(2): 198-209.

- J Chen, J Qiu, C Ahn (2017) Construction worker’s awkward posture recognition through supervised motion tensor decomposition. Automation in Construction 77: 67-81.

- E Valero, A Sivanathan, F Bosche, M Abdel Wahab (2016) Musculoskeletal disorders in construction: A review and a novel system for activity tracking with body area network. Applied Ergonomics 54: 120-130.

- D Wang, J Chen, D Zhao, F Dai, C Zheng, X Wu (2017) Monitoring worker's attention and vigilance in construction activities through a wireless and wearable electroencephalography system. Automation in Construction 82: 122-137.

- X Yan, H Li, AR Li, H Zhang (2017) Wearable IMU-based real-time motion warning system for construction workers' musculoskeletal disorders prevention. Automation in Construction 74: 2-11.

- M Kurien, MK Kim, M Kopsida, I Brilakis (2018) Real-time simulation of construction workers using combined human body and hand tracking for robotic construction worker system. Automation in Construction 86: 125-137.

- R Akhavian, AH Behzadan (2016) Productivity analysis of construction worker activities using smartphone sensors. Proceedings of the 16th International Conference on Computing in Civil and Building Engineering (ICCCBE): 1067-1074.

- R Akhavian, AH Behzadan (2015) Construction equipment activity recognition for simulation input modeling using mobile sensors and machine learning classifiers. Advanced Engineering Informatics 29(4): 867-877.

- R Akhavian, AH Behzadan (2018) Coupling human activity recognition and wearable sensors for data-driven construction simulation. Journal of Information Technology in Construction (ITcon) 23: 1-15.

- J Gong, CH Caldas (2010) Computer vision-based video interpretation model for automated productivity analysis of construction operations. Journal of Computing in Civil Engineering 24(3): 252-263.

- TS Kim, JH Cho, JT Kim (2013) Mobile motion sensor-based human activity recognition and energy expenditure estimation in building environments. Sustainability in Energy and Buildings, Proceedings of the 4th International Conference on Sustainability in Energy and Buildings, Springer, pp. 987-993.
